OCaml Weekly News: OCaml Weekly News, 30 Apr 2024

- OCANNL 0.3.1: a from-scratch deep learning (i.e. dense tensor optimization) framework

- I roughly translated Real World OCaml's Async concurrency chapter to eio

- Using Property-Based Testing to Test OCaml 5

- OCaml Backtraces on Uncaught Exceptions, by OCamlPro

- OCaml Users on Windows: Please share your insights on our user survey

- Graphql_jsoo_client 0.1.0 - library for GraphQL clients using WebSockts

- dream-html 3.0.0

- DkCoder 0.2 - Scripting in OCaml

- Ocaml-protoc-plugin 6.1.0

- Other OCaml News

MetaFilter: Kiwi takes a nap in Far North woman's chicken coop

ScreenAnarchy: Brussels 2024 Interview: 4PM Stars Oh Dal-su, Jang Young-nam and Director Jay Song Discuss New Korean Thriller

Last week, the 42nd Brussels International Film Festival played host to the world premiere of The Nightmare director Jay Song’s new South Korean thriller, 4PM. Inspired by the Belgian novel “Les Catilinaires”, from celebrated author Amélie Nothomb, which was published in English as The Stranger Next Door, Brussels was a fitting venue to debut this new interpretation. ScreenAnarchy sat down with actors Oh Dal-su, Jang Young-nam, Kim Hong-pa and Gong Jae-kyung, as well as the film's director, Jay Song, the morning after the film's premiere. Renowned character actor Oh Dal-su lands a rare lead role as Jung-in, a philosophy professor who moves to the countryside on sabbatical with his wife Hyun-suk (Jang Young-nam). After settling into their new bucolic digs, the couple approach the...

Last week, the 42nd Brussels International Film Festival played host to the world premiere of The Nightmare director Jay Song’s new South Korean thriller, 4PM. Inspired by the Belgian novel “Les Catilinaires”, from celebrated author Amélie Nothomb, which was published in English as The Stranger Next Door, Brussels was a fitting venue to debut this new interpretation. ScreenAnarchy sat down with actors Oh Dal-su, Jang Young-nam, Kim Hong-pa and Gong Jae-kyung, as well as the film's director, Jay Song, the morning after the film's premiere. Renowned character actor Oh Dal-su lands a rare lead role as Jung-in, a philosophy professor who moves to the countryside on sabbatical with his wife Hyun-suk (Jang Young-nam). After settling into their new bucolic digs, the couple approach the...

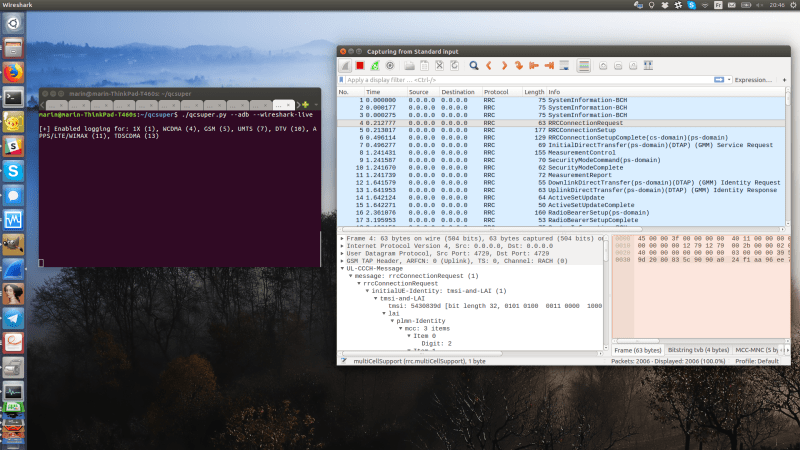

Hackaday: Turn Your Qualcomm Phone Or Modem Into Cellular Sniffer

If your thought repurposing DVB-T dongles for generic software defined radio (SDR) use was cool, wait until you see QCSuper, a project that re-purposes phones and modems to capture raw 2G/3G/4G/5G. You have to have a Qualcomm-based device, it has to either run rooted Android or be a USB modem, but once you find one in your drawers, you can get a steady stream of packets straight into your Wireshark window. No more expensive SDR requirement for getting into cellular sniffing – at least, not unless you are debugging some seriously low-level issues.

It appears there’s a Qualcomm specific diagnostic port you can access over USB, that this software can make use of. The 5G capture support is currently situational, but 2G/3G/4G capabilities seem to be pretty stable. And there’s a good few devices in the “successfully tested” list – given the way this software functions, chances are, your device will work! Remember to report whether it does or doesn’t, of course. Also, the project is seriously rich on instructions – whether you’re using Linux or Windows, it appears you won’t be left alone debugging any problems you might encounter.

This is a receive-only project, so, legally, you are most likely allowed to have fun — at least, it would be pretty complicated to detect that you are, unlike with transmit-capable setups. Qualcomm devices have pretty much permeated our lives, with Qualcomm chips nowadays used even in the ever-present SimCom modules, like the modems used in the PinePhone. Wondering what a sniffer could be useful for? Well, for one, if you ever need to debug a 4G base station you’ve just set up, completely legally, of course.

Recent additions: lsp-types 2.2.0.0

Haskell library for the Microsoft Language Server Protocol, data types

Recent additions: lsp-test 0.17.0.1

Functional test framework for LSP servers.

Recent additions: lsp 2.5.0.0

Haskell library for the Microsoft Language Server Protocol

Slashdot: NASA's Psyche Hits 25 Mbps From 140 Miles Away

Read more of this story at Slashdot.

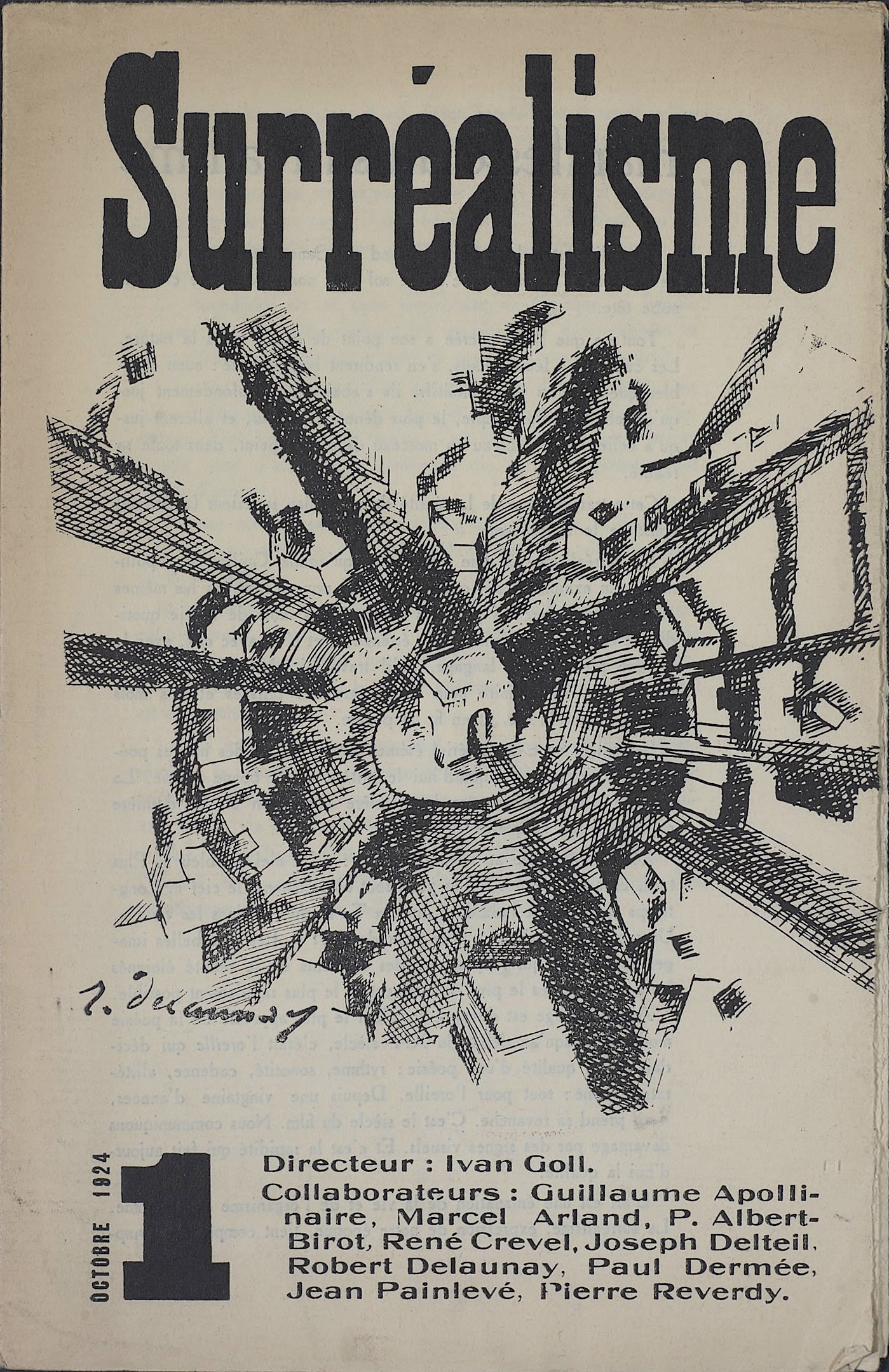

Open Culture: André Breton’s Surrealist Manifesto Turns 100 This Year

People don’t seem to write a lot of manifestos these days. Or if they do write manifestos, they don’t make the impact that they would have a century ago. In fact, this year marks the hundredth anniversary of the Manifeste du surréalisme, or Surrealist Manifesto, one of the most famous such documents. Or rather, it was two of the most famous such documents, each of them written by a different poet. On October 1, 1924, Yvan Goll published a manifesto in the name of the surrealist artists who looked to him as a leader (including Dada Manifesto author Tristan Tzara). Two weeks later, André Breton published a manifesto — the first of three — representing his own, distinct, group of surrealists with the very same title.

Though Goll may have beaten him to the punch, we can safely say, at a distance of one hundred years, that Breton wrote the more enduring manifesto. You can read it online in the original French as well as in English translation, but before you do, consider watching this short France 24 English documentary on its importance, as well as that of the surrealist art movement that it set off.

“There’s day-to-day reality, and then there’s superior reality,” says its narrator. “That’s what André Breton’s Surrealist Manifesto was aiming for: an artistic and spiritual revolution” driven by the rejection of “reason, logic, and even language, all of which its acolytes believed obscured deeper, more mystical truths.”

“The realistic attitude, inspired by positivism, from Saint Thomas Aquinas to Anatole France, clearly seems to me to be hostile to any intellectual or moral advancement,” the trained doctor Breton declares in the manifesto. “I loathe it, for it is made up of mediocrity, hate, and dull conceit. It is this attitude which today gives birth to these ridiculous books, these insulting plays.” He might well have also seen it as giving rise to events like the First World War, whose grinding senselessness he witnessed working in a neurological ward and carrying stretchers off the battlefield. It was these experiences that directly or indirectly inspired a wave of avant-garde twentieth-century art, more than a few pieces of which startle us even today — which is saying something, given our daily diet of absurdities in twenty-first century life.

Related content:

An Introduction to Surrealism: The Big Aesthetic Ideas Presented in Three Videos

A Brief, Visual Introduction to Surrealism: A Primer by Doctor Who Star Peter Capaldi

The Forgotten Women of Surrealism: A Magical, Short Animated Film

Based in Seoul, Colin Marshall writes and broadcasts on cities, language, and culture. His projects include the Substack newsletter Books on Cities, the book The Stateless City: a Walk through 21st-Century Los Angeles and the video series The City in Cinema. Follow him on Twitter at @colinmarshall or on Facebook.

Recent CPAN uploads - MetaCPAN: Data-TableReader-Decoder-HTML-0.020

HTML support for Data::TableReader

Changes for 0.020 - 2024-04-30

- New attribute Iterator->dataset_idx

- Fix bug where next_dataset returned true after the final table

Recent CPAN uploads - MetaCPAN: Data-TableReader-Decoder-HTML-0.017

Recent CPAN uploads - MetaCPAN: Data-TableReader-0.020

Locate and read records from human-edited data tables (Excel, CSV)

Changes for 0.020 - 2024-04-30

- Rename on_validation_fail -> on_validation_error The action codes are the same, but the callback has different arguments. Old callbacks applied using the attribute name 'on_validation_fail' will continue to work.

- New iterator attribute 'dataset_idx', for keeping track of which dataset you're on.

- Unimplemented Iterator->seek now dies as per the documentation. (no built-in iterator lacked support for seek, so unlikely to matter)

MetaFilter: simultaneously beloved and overlooked

Poet's Poet: Hoa Nguyen Alongside Lorine Niedecker by Hoa Nguyen Another Long Stretch of Geologic Time: Lorine Niedecker's "Lake Superior" by Sasha Steensen Pumps and Plovers: Lorine Niedecker and the Critique of Cybernetic Ecology by Samia Rahimitoola Niedecker's New Goose: Settler Colonialism on the Cusp of the Great Acceleration by Michelle Niemann Cleaning Women: Occupational Health and Broken Solidarities by Sarah Dimick Rueful Proximities: Lorine Niedecker, Queer Affection, and Lyric Drag by Brandon Menke The Marsh by et. al. Lorine Niedecker, previously

Open Culture: Behold The Drawings of Franz Kafka (1907–1917)

Runner 1907–1908

UK-born, Chicago-based artist Philip Hartigan has posted a brief video piece about Franz Kafka’s drawings. Kafka, of course, wrote a body of work, mostly never published during his lifetime, that captured the absurdity and the loneliness of the newly emerging modern world: In The Metamorphosis, Gregor transforms overnight into a giant cockroach; in The Trial, Josef K. is charged with an undefined crime by a maddeningly inaccessible court. In story after story, Kafka showed his protagonists getting crushed between the pincers of a faceless bureaucratic authority on the one hand and a deep sense of shame and guilt on the other.

On his deathbed, the famously tortured writer implored his friend Max Brod to burn his unpublished work. Brod ignored his friend’s plea and instead published them – novels, short stories and even his diaries. In those diaries, Kafka doodled incessantly – stark, graphic drawings infused with the same angst as his writing. In fact, many of these drawings have ended up gracing the covers of Kafka’s books.

“Quick, minimal movements that convey the typical despairing mood of his fiction” says Hartigan of Kafka’s art. “I am struck by how these simple gestures, these zigzags of the wrist, contain an economy of mark making that even the most experienced artist can learn something from.”

In his book Conversations with Kafka, Gustav Janouch describes what happened when he came upon Kafka in mid-doodle: the writer immediately ripped the drawing into little pieces rather than have it be seen by anyone. After this happened a couple times, Kafka relented and let him see his work. Janouch was astonished. “You really didn’t need to hide them from me,” he complained. “They’re perfectly harmless sketches.”

“Kafka slowly wagged his head to and fro – ‘Oh no! They are not as harmless as they look. These drawing are the remains of an old, deep-rooted passion. That’s why I tried to hide them from you…. It’s not on the paper. The passion is in me. I always wanted to be able to draw. I wanted to see, and to hold fast to what was seen. That was my passion.”

Check out some of Kafka’s drawings below. Or definitely see the recently-published edition, Franz Kafka: The Drawings. It’s the “first book to publish the entirety of Franz Kafka’s graphic output, including more than 100 newly discovered drawings.”

Horse and Rider 1909–1910

Three Runners 1912–1913

The Thinker 1913

Fencing 1917

If you would like to sign up for Open Culture’s free email newsletter, please find it here. Or follow our posts on Threads, Facebook, BlueSky or Mastodon.

If you would like to support the mission of Open Culture, consider making a donation to our site. It’s hard to rely 100% on ads, and your contributions will help us continue providing the best free cultural and educational materials to learners everywhere. You can contribute through PayPal, Patreon, and Venmo (@openculture). Thanks!

Related Content:

Vladimir Nabokov’s Delightful Butterfly Drawings

The Art of William Faulkner: Drawings from 1916–1925

The Drawings of Jean-Paul Sartre

Flannery O’Connor’s Satirical Cartoons: 1942–1945

Jonathan Crow is a Los Angeles-based writer and filmmaker whose work has appeared in Yahoo!, The Hollywood Reporter, and other publications. You can follow him at @jonccrow.

Hackaday: Squeeze Another Drive into a Full-Up NAS

A network-attached storage (NAS) device is a frequent peripheral in home and office networks alike, yet so often these devices come pre-installed with a proprietary OS which does not lend itself to customization. [Codedbearder] had just such a NAS, a Terramaster F2-221, which while it could be persuaded to run a different OS, couldn’t do so without an external USB hard drive. Their solution was elegant, to create a new backplane PCB which took the same space as the original but managed to shoehorn in a small PCI-E solid-state drive.

The backplane rests in a motherboard connector which resembles a PCI-E one but which carries a pair of SATA interfaces. Some investigation reveals it also had a pair of PCI-E lanes though, so after some detective work to identify the pinout there was the chance of using those. A new PCB was designed, cleverly fitting an M.2 SSD exactly in the space between two pieces of chassis, allowing the boot drive to be incorporated without annoying USB drives. The final version of the board looks for all the world as though it was meant to be there from the start, a truly well-done piece of work.

Of course, if off-the-shelf is too easy for you, you can always build your own NAS.

Slashdot: Russia Clones Wikipedia, Censors It, Bans Original

Read more of this story at Slashdot.

Recent CPAN uploads - MetaCPAN: Perl-Tokenizer-0.11

A tiny Perl code tokenizer.

Changes for 0.11 - 2024-04-30

- bin/pl2html: generate smaller output.

- Minor documentation improvements.

- Meta updates.

Recent additions: netw 0.1.1.0

Binding to C socket API operating on bytearrays.

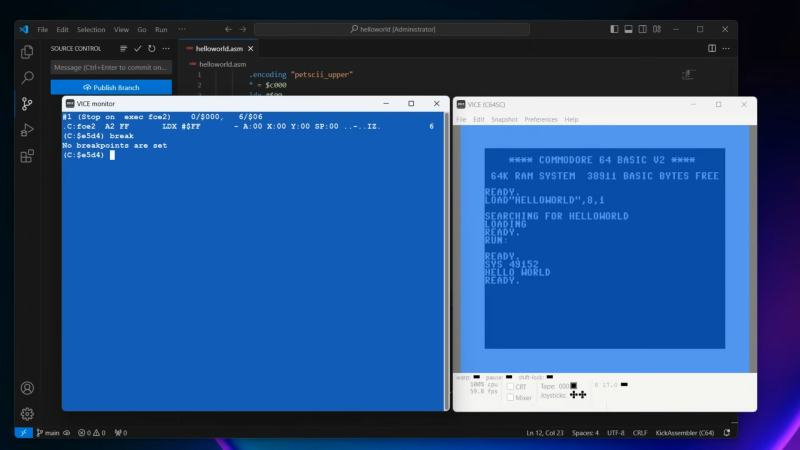

Hackaday: You Can Use Visual Studio Code To Write Commodore 64 Assembly

Once upon a time, you might have developed for the Commodore 64 using the very machine itself. You’d use the chunky old keyboard, a tape drive, or the 1541 disk drive if you wanted to work faster. These days, though, we have more modern tools that provide a much more comfortable working environment. [My Developer Thoughts] has shared a guide on how to develop for the Commodore 64 using Visual Studio Code on Windows 11.

The video starts right at the beginning from a fresh Windows install, assuming you’ve got no dev tools to start with. It steps through installing git, Java, Kick Assembler, and Visual Studio Code. Beyond that, it even explains how to use these tools in partnership with VICE – the Versatile Commodore Emulator. That’s a key part of the whole shebang—using an emulator on the same machine is a far quicker way to develop than using real Commodore hardware. You can always truck your builds over to an actual C64 when you’ve worked the bugs out!

It’s a great primer for anyone who is new to C64 development and doesn’t know where to start. Plus, we love the idea of bringing modern version control and programming techniques to this ancient platform. Video after the break.

[Thanks to Stephen Walters for the tip!]

Disquiet: Taylor Deupree’s Loop of Loops

I love record albums, certainly, but in 2024, as for many years now, there’s nothing for me quite like fragments posted by musicians online as they work toward a finished work. The word “work” appears twice in that previous sentence, eventually as a synonym for a fixed document, but first as the effort it took to get there. You can hear that sort of effort in an untitled track that Taylor Deupree just posted in his newsletter, which is titled The Imperfect. The recording is just under three minutes of looping drones. Per the brief description, there are two loops: “loop a / Arp2600, pitch pipe, wooden abacus → strymon volante → meris mercury x / loop b / kaleidoloop.” If the words aren’t familiar, a quick search online will reveal the instruments being described. What matters is the result, a kind of lush, syrupy stasis, the sonic equivalent of a nearly blank mind that is stuck on something ponderous, but not uncomfortable with the mental obstacle. It’s a beautiful little treat. The audio is only in Deupree’s newsletter, so you’ll need to click through to listen.

Slashdot: G7 Reaches Deal To Exit From Coal By 2035

Read more of this story at Slashdot.

Slashdot: Tether Buys $200 Million Majority Stake In Brain-Computer Interface Company

Read more of this story at Slashdot.

Hackaday: Sound and Water Make Weird Vibes in Microgravity

NASA astronaut [Don Pettit] shared a short video from an experiment he performed on the ISS back in 2012, demonstrating the effects of sound waves on water in space. Specifically, seeing what happens when a sphere of water surrounding an air bubble perched on a speaker cone is subjected to a variety of acoustic waves.

The result is visually striking patterns across different parts of the globe depending on what kind of sound waves were created. It’s a neat visual effect, and there’s more where that came from.

[Don] experimented with music as well as plain tones, and found that cello music had a particularly interesting effect on the setup. Little drops of water would break off from inside the sphere and start moving around the inside of the air bubble when cello music was played. You can see this in action as part of episode 160 from SmarterEveryDay (cued up to 7:51) which itself is about exploring the phenomenon of how water droplets can appear to act in an almost hydrophobic way.

This isn’t the first time water and sound collide in visually surprising ways. For example, check out the borderline optical illusion that comes from pouring water past a subwoofer emitting 24 Hz while the camera captures video at 24 frames per second.

Slashdot: T2 Linux 24.5 Released

Read more of this story at Slashdot.

Recent additions: national-australia-bank 0.0.5

Functions for National Australia Bank transactions

The Universe of Discourse: Hawat! Hawat! Hawat! A million deaths are not enough for Hawat!

[ Content warning: Spoilers for Frank Herbert's novel Dune. Conversely none of this will make sense if you haven't read it. ]

Summary: Thufir Hawat is the real traitor. He set up Yueh to take the fall.

This blog post began when I wondered:

Hawat knows that Wellington Yueh has, or had a wife, Wanna. She isn't around. Hasn't he asked where she is?

In fact she is (or was) a prisoner of the Harkonnens and the key to Yueh's betrayal. If Hawat had asked the obvious question, he might have unraveled the whole plot.

But Hawat is a Mentat, and the Master of Assassins for a Great House. He doesn't make dumbass mistakes like forgetting to ask “what are the whereabouts of the long-absent wife of my boss's personal physician?”

The Harkonnens nearly succeed in killing Paul, by immuring an agent in the Atreides residence six weeks before Paul even moves in. Hawat is so humiliated by his failure to detect the agent hidden in the wall that he offers the Duke his resignation on the spot. This is not a guy who would have forgotten to investigate Yueh's family connections.

And that wall murder thing wasn't even the Harkonnens' real plan! It was just a distraction:

"We've arranged diversions at the Residency," Piter said. "There'll be an attempt on the life of the Atreides heir — an attempt which could succeed."

"Piter," the Baron rumbled, "you indicated —"

"I indicated accidents can happen," Piter said. "And the attempt must appear valid."

Piter de Vries was so sure that Hawat would find the agent in the wall, he was willing to risk spoiling everything just to try to distract Hawat from the real plan!

If Hawat was what he appeared to be, he would never have left open the question of Wanna's whereabouts. Where is she? Yueh claimed that she had been killed by the Harkonnens, and Jessica offers that as a reason that Yueh can be trusted.

But the Bene Gesserit have a saying: “Do not count a human dead until you've seen his body. And even then you can make a mistake.” The Mentats must have a similar saying. Wanna herself was Bene Gesserit, who are certainly human and notoriously difficult to kill. She was last known to be in the custody of the Harkonnens. Why didn't Hawat consider the possibility that Wanna might not be dead, but held hostage, perhaps to manipulate Duke Leto's physician and his heir's tutor — as in fact she was? Of course he did.

"Not to mention that his wife was a Bene Gesserit slain by the Harkonnens," Jessica said.

"So that’s what happened to her," Hawat said.

There's Hawat, pretending to be dumb.

Supposedly Hawat also trusted Yueh because he had received Imperial Conditioning, and as Piter says, “it's assumed that ultimate conditioning cannot be removed without killing the subject”. Hawat even says to Jessica: “He's conditioned by the High College. That I know for certain.”

Okay, and? Could it be that Thufir Hawat, Master of Assassins, didn't consider the possibility that the Imperial Conditioning could be broken or bent? Because Piter de Vries certainly did consider it, and he was correct. If Piter had plotted to subvert Imperial Conditioning to gain an advantage for his employer, surely Hawat would have considered the same.

Notice, also, what Hawat doesn't say to Jessica. He doesn't say that Yueh's Imperial Conditioning can be depended on, or that Yueh is trustworthy. Jessica does not have the gift of the full Truthsay, but it is safest to use the truth with her whenever possible. So Hawat misdirects Jessica by saying merely that he knows that Yueh has the Conditioning.

Yueh gave away many indications of his impending betrayal, which would have been apparent to Hawat. For example:

Paul read: […]

"Stop it!" Yueh barked.

Paul broke off, stared at him.

Yueh closed his eyes, fought to regain composure. […]

"Is something wrong?" Paul asked.

"I'm sorry," Yueh said. "That was … my … dead wife's favorite passage."

This is not subtle. Even Paul, partly trained, might well have detected Yueh's momentary hesitation before his lie about Wanna's death. Paul detects many more subtle signs in Yueh as well as in others:

"Will there be something on the Fremen?" Paul asked.

"The Fremen?" Yueh drummed his fingers on the table, caught Paul staring at the nervous motion, withdrew his hand.

Hawat the Mentat, trained for a lifetime in observing the minutiae of other people's behavior, and who saw Yueh daily, would surely have suspected something.

So, Hawat knew the Harkonnens’ plot: Wanna was their hostage, and they were hoping to subvert Yueh and turn him to treason. Hawat might already have known that the Imperial Conditioning was not a certain guarantee, but at the very least he could certainly see that the Harkonnens’ plan depended on subverting it. But he lets the betrayal go ahead. Why? What is Hawat's plan?

Look what he does after the attack on the Atreides. Is he killed in the attack, as so many others are? No, he survives and immediately runs off to work for House Harkonnen.

Hawat might have had difficulty finding a new job — “Say aren't you the Master of Assassins whose whole house was destroyed by their ancient enemies? Great, we'll be in touch if we need anyone fitting that description.” But Vladimir Harkonnen will be glad to have him, because he was planning to get rid of Piter and would soon need a new Mentat, as Hawat presumably knoew or guessed. And also, the Baron would enjoy having someone around to remind him of his victory over the Atreides, which Hawat also knows.

Here's another question: Where did Yueh get the tooth with the poison gas? The one that somehow wasn't detected by the Baron's poison snooper? The one that conveniently took Piter out of the picture? We aren't told. But surely this wasn't the sort of thing was left lying around the Ducal Residence for anyone to find. It is, however, just the sort of thing that the Master of Assassins of a Great House might be able to procure.

However he thought he came by the poison in the tooth, Yueh probably never guessed that its ultimate source was Hawat, who could have arranged that it was available at the right time.

This is how I think it went down:

The Emperor announces that House Atreides will be taking over the Arrakis fief from House Harkonnen. Everyone, including Hawat, sees that this is a trap. Hawat also foresees that the trap is likely to work: the Duke is too weak and Paul too young to escape it. Hawat must choose a side. He picks the side he thinks will win: the Harkonnens. With his assistance, their victory will be all but assured. He just has to arrange to be in the right place when the dust settles.

Piter wants Hawat to think that Jessica will betray the Duke. Very well, Hawat will pretend to be fooled. He tells the Atreides nothing, and does his best to turn the suspicions of Halleck and the others toward Jessica.

At the same time he turns the Harkonnens' plot to his advantage. Seeing it coming, he can avoid dying in the massacre. He provides Yueh with the chance to strike at the Baron and his close advisors. If Piter dies in the poison gas attack, as he does, his position will be ready for Hawat to fill; if not the position was going to be open soon anyway. Either way the Baron or his successor would be only too happy to have a replacement at hand.

(Hawat would probably have preferred that the Baron also be killed by the tooth, so that he could go to work for the impatient and naïve Feyd-Rautha instead of the devious old Baron. But it doesn't quite go his way.)

Having successfully made Yueh his patsy and set himself up to join the employ of the new masters of Arrakis and the spice, Hawat has some loose ends to tie up. Gurney Halleck has survived, and Jessica may also have survived. (“Do not count a human dead until you've seen his body.”) But Hawat is ready for this. Right from the beginning he has been assisting Piter in throwing suspicion on Jessica, with the idea that it will tend to prevent survivors of the massacre from reuniting under her leadership or Paul's. If Hawat is fortunate Gurney will kill Jessica, or vice versa, wrapping up another loose end.

Where Thufir Hawat goes, death and deceit follow.

Addendum

Maybe I should have mentioned that I have not read any of the sequels to Dune, so perhaps this is authoritatively contradicted — or confirmed in detail — in one of the many following books. I wouldn't know.

Planet Haskell: Mark Jason Dominus: Hawat! Hawat! Hawat! A million deaths are not enough for Hawat!

[ Content warning: Spoilers for Frank Herbert's novel Dune. Conversely none of this will make sense if you haven't read it. ]

Summary: Thufir Hawat is the real traitor. He set up Yueh to take the fall.

This blog post began when I wondered:

Hawat knows that Wellington Yueh has, or had a wife, Wanna. She isn't around. Hasn't he asked where she is?

In fact she is (or was) a prisoner of the Harkonnens and the key to Yueh's betrayal. If Hawat had asked the obvious question, he might have unraveled the whole plot.

But Hawat is a Mentat, and the Master of Assassins for a Great House. He doesn't make dumbass mistakes like forgetting to ask “what are the whereabouts of the long-absent wife of my boss's personal physician?”

The Harkonnens nearly succeed in killing Paul, by immuring an agent in the Atreides residence six weeks before Paul even moves in. Hawat is so humiliated by his failure to detect the agent hidden in the wall that he offers the Duke his resignation on the spot. This is not a guy who would have forgotten to investigate Yueh's family connections.

And that wall murder thing wasn't even the Harkonnens' real plan! It was just a distraction:

"We've arranged diversions at the Residency," Piter said. "There'll be an attempt on the life of the Atreides heir — an attempt which could succeed."

"Piter," the Baron rumbled, "you indicated —"

"I indicated accidents can happen," Piter said. "And the attempt must appear valid."

Piter de Vries was so sure that Hawat would find the agent in the wall, he was willing to risk spoiling everything just to try to distract Hawat from the real plan!

If Hawat was what he appeared to be, he would never have left open the question of Wanna's whereabouts. Where is she? Yueh claimed that she had been killed by the Harkonnens, and Jessica offers that as a reason that Yueh can be trusted.

But the Bene Gesserit have a saying: “Do not count a human dead until you've seen his body. And even then you can make a mistake.” The Mentats must have a similar saying. Wanna herself was Bene Gesserit, who are certainly human and notoriously difficult to kill. She was last known to be in the custody of the Harkonnens. Why didn't Hawat consider the possibility that Wanna might not be dead, but held hostage, perhaps to manipulate Duke Leto's physician and his heir's tutor — as in fact she was? Of course he did.

"Not to mention that his wife was a Bene Gesserit slain by the Harkonnens," Jessica said.

"So that’s what happened to her," Hawat said.

There's Hawat, pretending to be dumb.

Supposedly Hawat also trusted Yueh because he had received Imperial Conditioning, and as Piter says, “it's assumed that ultimate conditioning cannot be removed without killing the subject”. Hawat even says to Jessica: “He's conditioned by the High College. That I know for certain.”

Okay, and? Could it be that Thufir Hawat, Master of Assassins, didn't consider the possibility that the Imperial Conditioning could be broken or bent? Because Piter de Vries certainly did consider it, and he was correct. If Piter had plotted to subvert Imperial Conditioning to gain an advantage for his employer, surely Hawat would have considered the same.

Notice, also, what Hawat doesn't say to Jessica. He doesn't say that Yueh's Imperial Conditioning can be depended on, or that Yueh is trustworthy. Jessica does not have the gift of the full Truthsay, but it is safest to use the truth with her whenever possible. So Hawat misdirects Jessica by saying merely that he knows that Yueh has the Conditioning.

Yueh gave away many indications of his impending betrayal, which would have been apparent to Hawat. For example:

Paul read: […]

"Stop it!" Yueh barked.

Paul broke off, stared at him.

Yueh closed his eyes, fought to regain composure. […]

"Is something wrong?" Paul asked.

"I'm sorry," Yueh said. "That was … my … dead wife's favorite passage."

This is not subtle. Even Paul, partly trained, might well have detected Yueh's momentary hesitation before his lie about Wanna's death. Paul detects many more subtle signs in Yueh as well as in others:

"Will there be something on the Fremen?" Paul asked.

"The Fremen?" Yueh drummed his fingers on the table, caught Paul staring at the nervous motion, withdrew his hand.

Hawat the Mentat, trained for a lifetime in observing the minutiae of other people's behavior, and who saw Yueh daily, would surely have suspected something.

So, Hawat knew the Harkonnens’ plot: Wanna was their hostage, and they were hoping to subvert Yueh and turn him to treason. Hawat might already have known that the Imperial Conditioning was not a certain guarantee, but at the very least he could certainly see that the Harkonnens’ plan depended on subverting it. But he lets the betrayal go ahead. Why? What is Hawat's plan?

Look what he does after the attack on the Atreides. Is he killed in the attack, as so many others are? No, he survives and immediately runs off to work for House Harkonnen.

Hawat might have had difficulty finding a new job — “Say aren't you the Master of Assassins whose whole house was destroyed by their ancient enemies? Great, we'll be in touch if we need anyone fitting that description.” But Vladimir Harkonnen will be glad to have him, because he was planning to get rid of Piter and would soon need a new Mentat, as Hawat presumably knoew or guessed. And also, the Baron would enjoy having someone around to remind him of his victory over the Atreides, which Hawat also knows.

Here's another question: Where did Yueh get the tooth with the poison gas? The one that somehow wasn't detected by the Baron's poison snooper? The one that conveniently took Piter out of the picture? We aren't told. But surely this wasn't the sort of thing was left lying around the Ducal Residence for anyone to find. It is, however, just the sort of thing that the Master of Assassins of a Great House might be able to procure.

However he thought he came by the poison in the tooth, Yueh probably never guessed that its ultimate source was Hawat, who could have arranged that it was available at the right time.

This is how I think it went down:

The Emperor announces that House Atreides will be taking over the Arrakis fief from House Harkonnen. Everyone, including Hawat, sees that this is a trap. Hawat also foresees that the trap is likely to work: the Duke is too weak and Paul too young to escape it. Hawat must choose a side. He picks the side he thinks will win: the Harkonnens. With his assistance, their victory will be all but assured. He just has to arrange to be in the right place when the dust settles.

Piter wants Hawat to think that Jessica will betray the Duke. Very well, Hawat will pretend to be fooled. He tells the Atreides nothing, and does his best to turn the suspicions of Halleck and the others toward Jessica.

At the same time he turns the Harkonnens' plot to his advantage. Seeing it coming, he can avoid dying in the massacre. He provides Yueh with the chance to strike at the Baron and his close advisors. If Piter dies in the poison gas attack, as he does, his position will be ready for Hawat to fill; if not the position was going to be open soon anyway. Either way the Baron or his successor would be only too happy to have a replacement at hand.

(Hawat would probably have preferred that the Baron also be killed by the tooth, so that he could go to work for the impatient and naïve Feyd-Rautha instead of the devious old Baron. But it doesn't quite go his way.)

Having successfully made Yueh his patsy and set himself up to join the employ of the new masters of Arrakis and the spice, Hawat has some loose ends to tie up. Gurney Halleck has survived, and Jessica may also have survived. (“Do not count a human dead until you've seen his body.”) But Hawat is ready for this. Right from the beginning he has been assisting Piter in throwing suspicion on Jessica, with the idea that it will tend to prevent survivors of the massacre from reuniting under her leadership or Paul's. If Hawat is fortunate Gurney will kill Jessica, or vice versa, wrapping up another loose end.

Where Thufir Hawat goes, death and deceit follow.

Addendum

Maybe I should have mentioned that I have not read any of the sequels to Dune, so perhaps this is authoritatively contradicted — or confirmed in detail — in one of the many following books. I wouldn't know.

Hackaday: This Is How a Pen Changed the World

Look around you. Chances are, there’s a BiC Cristal ballpoint pen among your odds and ends. Since 1950, it has far outsold the Rubik’s Cube and even the iPhone, and yet, it’s one of the most unsung and overlooked pieces of technology ever invented. And weirdly, it hasn’t had the honor of trademark erosion like Xerox or Kleenex. When you ‘flick a Bic’, you’re using a lighter.

It’s probably hard to imagine writing with a feather and a bottle of ink, but that’s what writing was limited to for hundreds of years. When fountain pens first came along, they were revolutionary, albeit expensive and leaky. In 1900, the world literacy rate stood around 20%, and exorbitantly-priced, unreliable utensils weren’t helping.

In 1888, American inventor John Loud created the first ballpoint pen. It worked well on leather and wood and the like, but absolutely shredded paper, making it almost useless.

In 1888, American inventor John Loud created the first ballpoint pen. It worked well on leather and wood and the like, but absolutely shredded paper, making it almost useless.

One problem was that while the ball worked better than a nib, it had to be an absolutely perfect fit, or ink would either get stuck or leak out everywhere. Then along came László Bíró, who turned instead to the ink to solve the problems of the ballpoint.

Bíró’s ink was oil-based, and sat on top of the paper rather than seeping through the fibers. While gravity and pen angle had been a problem in previous designs, his ink induced capillary action in the pen, allowing it to write reliably from most angles. You’d think this is where the story ends, but no. Bíró charged quite a bit for his pens, which didn’t help the whole world literacy thing.

French businessman Marcel Bich became interested in Bíró’s creation and bought the patent rights for $2 million ($26M in 2024). This is where things get interesting, and when the ballpoint pen becomes incredibly cheap and ubiquitous. In addition to thicker ink, the secret is in precision-machined steel balls, which Marcel Bich was able to manufacture using Swiss watchmaking machinery. When released in 1950, the Bic Cristal cost just $2. Since this vital instrument has continued to be so affordable, world literacy is at 90% today.

When we wrote about the Cristal, we did our best to capture the essence of what about the pen makes continuous, dependable ink transmission possible, but the video below goes much further, with extremely detailed 3D models.

Thanks to both [George Graves] and [Stephen Walters] for the tip!

Saturday Morning Breakfast Cereal: Saturday Morning Breakfast Cereal - Foam

Click here to go see the bonus panel!

Hovertext:

The guy opens a coat to reveal respectable employment with opportunity for promotion.

Today's News:

MetaFilter: West Deutsche Rundfunk Big Band does Prince

It's Prince songs done by a big band jazz orchestra.

MetaFilter: A compendium of Signs and Portents

The Book of Miracles unfolds in chronological order divine wonders and horrors, from Noah's Ark and the Flood at the beginning to the fall of Babylon the Great Harlot at the end; in between this grand narrative of providence lavish pages illustrate meteorological events of the sixteenth century. In 123 folios with 23 inserts, each page fully illuminated, one astonishing, delicious, supersaturated picture follows another. Vivid with cobalt, aquamarine, verdigris, orpiment, and scarlet pigment, they depict numerous phantasmagoria: clouds of warriors and angels, showers of giant locusts, cities toppling in earthquakes, thunder and lightning. Against dense, richly painted backgrounds, the artist or artists' delicate brushwork touches in fleecy clouds and the fiery streaming tails of comets. There are monstrous births, plagues, fire and brimstone, stars falling from heaven, double suns, multiple rainbows, meteor showers, rains of blood, snow in summer. [...] Its existence was hitherto unknown, and silence wraps its discovery; apart from the attribution to Augsburg, little is certain about the possible workshop, or the patron for whom such a splendid sequence of pictures might have been created.The Augsburg Book of Miracles: a uniquely entrancing and enigmatic work of Renaissance art, available as a 13-minute video essay, a bound art book with hundreds of pages of trilingual commentary, or a snazzy Wikimedia slideshow of high-resolution scans.

Colossal: Lauren Fensterstock’s Cosmic Mosaics Map Out the Unknown in Crystal and Gems

“Beyond Mind” (2023), vintage crystal, glass, quartz, obsidian, tourmaline, and mixed media, 27 x 26 x 13 inches. Photo by Luc Demers. All images © Lauren Fensterstock, shared with permission

When a massive star dies, it collapses with an enormous explosion that produces a supernova. In some cases, the remains become a black hole, the enigmatic phenomenon that traps everything it comes into contact with—even light itself.

The life cycle of stars informs the most recent works by artist Lauren Fensterstock, who applies the principles of such stellar transformations to human interaction and connection. From her studio in Portland, Maine, she creates dense mosaics of fragmented crystals and stones including quartz, obsidian, and tourmaline that glimmer when hit by light and form shadowy areas of intrigue when not.

Cloaking sculptures and large-scale installations, Fensterstock’s dazzling compositions evoke natural forms like flowers, stars, and clouds and speak to cosmic and terrestrial entanglement. “I have to admit that I agonize over the placement of every single (piece),” the artist shares. “There are days where it flows together like a magical puzzle and other days where I place, rip out, and redo a square inch of surface again and again for hours. Even amidst a huge mass of material, every moment has to have that feeling of effortless perfection.”

“The totality of time lusters the dusk” (2020), mixed media, installation at The Renwick Gallery of the Smithsonian American Art Museum. Photo by Ron Blunt

The gems are sometimes firmly embedded within the surface and at others, appear to explode outward in an energetic eruption. Celestial implosions are apt metaphors for transformation, the artist says, and “pairs of stars speak to the complexities of personal connections… In the newest work—which explores vast sky maps filled with multiple constellations—I attempt to move beyond a single star or an isolated self to show the entanglement of the cosmic whole.”

While beautiful on their own, the precious materials explore broader themes in aggregate. Just as astrology uses constellations and cosmic machinations to offer insight and meaning into the unknown, Fensterstock’s jeweled sculptures chart relationships between the individual and the universe to draw closer to the divine.

The artist is currently working toward a solo show opening this fall at Claire Oliver Gallery in Harlem. Inspired by her daily meditation practice, she’ll present elaborately mapped creations of lotuses, black holes, fallen stars, and a bow and arrow that appear as offerings to the universe. In addition to that exhibition, the artist is showing in May at the Shelburne Museum and will attend a residency in Italy this September, to work on a book about entanglement and artist muses. Find more about those projects and her multi-faceted practice on her website and Instagram.

Detail of “Dwelling” (2023), vintage crystal, glass, quartz, and mixed media, 18 x 16 x 13 inches. Photo by Luc Demers

Left: “The Undiluted” (2023), vintage crystal, glass, quartz, obsidian, tourmaline, and mixed media. Photo by Luc Demers. Right: “The Unhurt” (2023), vintage crystal, glass, quartz, and mixed media, 22 x 27 x 14 inches. Photo by Luc Demers

“The totality of time lusters the dusk” (2020), mixed media, installation at The Renwick Gallery of the Smithsonian American Art Museum. Photo by Ron Blunt

Left: “The Many” (2023), vintage crystal, glass, quartz, and mixed media, 38 x 38 x 10 inches. Image courtesy of Claire Oliver Gallery. Right: “Heart of Negation” (2022), vintage crystal, glass, quartz, and mixed media, 54 × 54 × 14 inches

“Eclipse” (2022), vintage crystal, glass, quartz, obsidian, tourmaline, and mixed media. Photo by Luc Demers

“The Order of Things” (2016), shells and mixed media

Do stories and artists like this matter to you? Become a Colossal Member today and support independent arts publishing for as little as $5 per month. The article Lauren Fensterstock’s Cosmic Mosaics Map Out the Unknown in Crystal and Gems appeared first on Colossal.

Penny Arcade: Falling In

Even just on PC, the Fallout 4 "Next Gen" update has been pretty goof troop; those who owned it on GOG managed to pull the ripcord via its ability to rollback patches. It broke a bunch of mods, and it's important to note that while there are lots of mods that add content many of them just sand the edges off UI concerns or make the game easier and more fun to play. On Playstation, the whole affair gets stranger: it wasn't clear originally which versions of the game were entitled to the update, which I sorta just thought was free. I'm gonna paste a paragraph here from the IGN Article about it - try to parse this:

ScreenAnarchy: SHARDLAKE Review: Secrets and Fears in Not So Merry Old England

Arthur Hughes, Anthony Boyle, and Sean Bean star in the murder-mystery series, set in England's Tudor Era, premiering Wednesday, May 1, on Hulu, Star+, and Disney+.

Arthur Hughes, Anthony Boyle, and Sean Bean star in the murder-mystery series, set in England's Tudor Era, premiering Wednesday, May 1, on Hulu, Star+, and Disney+.

Greater Fool – Authored by Garth Turner – The Troubled Future of Real Estate: High anxiety

It’s May this week. Looks like Rutting Season 24 failed.

In a few days we’ll know the housing numbers, but in the bellweather markets of 416, 905 and 604 expect a fizzle. The April rate cut never came. The June chop is looking dodgy. And in the US, interest rates will fall between half a point …and zero… between now and Christmas.

Taxes went up in the budget. A fat wave of mortgage renewals is coming at rates which were supposed to be lower by now. Public sentiment has soured. House prices have not materially declined. Affordability is at a record low. Housing starts are going down, not up.

As we told you a few days ago, sales of new condos and detacheds have crashed and burned. Down 80%. Unsold inventory is stacking up. Precon buyers are defaulting in serious numbers, unable to close deals they signed two and three years ago. Over sixty developments containing 21,000 units in the GTA alone have been axed. On every level, government policy has been unable to deal with the real estate conundrum. So, soon, Canadians will likely change governments.

This week the US Fed will again leave rates on pause, and is likely to toughen up its language. More hawk talk. Rates may not move at all until the end of the year, given economic growth and persistently high prices (plus the explosively divisive American election in November). Bond yields on both sides of the border went up, and some economists are openly opining that CBs got their rate strategies wrong.

“I had favoured that view and remain of the belief that had the Fed not stopped at 5.5%, then we wouldn’t be faced with as pervasive inflation risk today,” says our economist pal Derek Holt. “Forecasting inflation is difficult, but inflation risk remained high and should have been more decisively snuffed out. To pause at 5.5% was a policy error in my view but now we have to live with it. That window has passed.”

Did you catch the latest Ipsos poll on Friday. Brutal. Canadians are pissed. It seems a prelude to trouble.

The survey found 80% feel owning a home s now “only for the rich.” That’s an increase of 11% in a year. The Zs believe this 90%. Mills are at 82%. Even the Boomers are there, at 78%. Almost three-quarters of people without a house have given up trying to get one. “You can see why the anxiety is so high,” says pollster Darrell Bricker, “because an increasing number of people believe they need to own a home, but fewer and fewer people believe that they can.” And 77% of respondents said the federal government had failed them. Correctly, they don’t believe political claims of massive house-building or falling prices.

A fifth of people are saving less for retirement. A third are depleting savings to pay bills. Most people now believe interest rates won’t be coming down. And when you put all of this together, it explains why the spring housing market has quickly faded into nothingness.

Well, none of this deters some people who are determined to buy. Like Bill and his squeeze.

“My wife and I are in our early 30s, renting an apartment in Toronto. We’ve been in our current place for a few years, and because of that, our rent is below market. We’re happy in this place, but it’s too small for a family, which we’re hoping to start this year.

“We’ve been looking to buy a home for a few years (for the usual reasons – more room, and safety from the infamous “family moving in eviction”), and have saved up enough for a 20% downpayment on a lower-end Toronto freehold home in a non-registered account (~$200,000). Our income is high, around $300,000 (slightly skewed towards my salary), but that would drop with only EI to cover my wife’s maternity leave. While there isn’t a whole lot of flexibility on timing for the purchase (hoping for within 2024), I’m trying to soak up as much information as I can to figure out when might be ideal – including daily reading of your blog!

“Your post on April 26th had the following ominous conclusion – do you have any advice for me?…Well, it’s a mess. Soon we will move into the next phase. More ugly coming. Stay liquid.”

Advice? Sure. Wait.

First, you do not need to rush into real estate because you might have a family this year. Babies don’t actually know much about deeds vs rental agreements. You have at least a couple of years to get this right. Second, buying in Toronto means even with $200k down you’ll end up with a mortgage of $1 million or more (maybe a lot more). Is that really he way you want to start out family life, especially with only EI during a mat leave? Why not wait until she’s back at work? Keep the stress in check.

Mostly, a lot has changed – as referenced above. The big rate cuts ain’t coming. The market may well start to correct as sellers accept the inevitable. The pool of buyers is shrinking. Realtor ranks are thinning fast. Politicians are on life support. And the potential for disruption spilling over from the States is palpable.

A real estate crash, especially in the Big Smoke, is unlikely. Too many people. Too few good listings. But DOM should lengthen. Months of inventory will grow. Sellers will get anxious and flexible. A buyer’s market. Your liquidity will become more powerful. You may end up owing less, or owning more.

And she will thank you.

About the picture: “Snapped by a family member,” writes Leslie, “whilst I was reading yesterday’s blog post aloud. I even showed them the photo of the wet dog but… didn’t pique their interest. They have a good home on year two of a 5 year fixed with occasional extra smack downs on the principal. No worries. One of them is Tarzan and the other one is Bear. (I will leave it with your astute readers to guess which is which.) They are snoozing. (Ya think?)”

To be in touch or send a picture of your beast, email to ‘garth@garth.ca’.

Schneier on Security: WhatsApp in India

Meta has threatened to pull WhatsApp out of India if the courts try to force it to break its end-to-end encryption.

Colossal: Yuko Nishikawa’s Sprawling Sculptures Mimic the Rambling Growth of Moss and Plants

All images © Yuko Nishikawa, shared with permission

For the last two years, Yuko Nishikawa (previously) has prioritized traveling. Chasing the unbridled inspiration that new environments bring to her practice, the Brooklyn-based artist has found herself in Japan, participating in residency programs and appreciating time on her own. Using local materials, crossing paths with people, and immersing herself in different landscapes has become the starting point for much of her recent work.

Nishikawa’s previous body of work incorporates more bulbous vessels, whereas the artist’s newest solo exhibition, Mossy Mossy, returns to the classic paper pod mobiles she’s known for and evokes a physical reflection of her musings from Hokuto-shi. Located in Yamanashi Prefecture, the city is replete with moss sprawling atop rocks, alongside waterfalls, and covering buildings. This simple plant “spreads from the center to the periphery and grows and increases,” she says. Methodically balanced by weight and connected by wire, Nishikawa suspends a plethora of green pods uniquely shaped from paper pulp.

Composed of more than 30 sculptures, all works in Mossy Mossy represent a system of growth that evokes the plants’ rambling qualities and always stem from a single, fixed line hanging from the ceiling. Delicate, dangling elements invite each mobile to respond to the movement of viewers and airflow. “Rather than looking at it from one point, the shape changes when you move your body to see and experience it from all directions,” she explains.

Mossy Mossy is on view now at Gasbon Metabolism until May 27, and Nishikawa is also preparing for an exhibition and lecture in October 2024 at Pollock Gallery. Follow on Instagram for updates, and see her website for more work.

Do stories and artists like this matter to you? Become a Colossal Member today and support independent arts publishing for as little as $5 per month. The article Yuko Nishikawa’s Sprawling Sculptures Mimic the Rambling Growth of Moss and Plants appeared first on Colossal.

new shelton wet/dry: artificial synapse

European authorities say they have rounded up a criminal gang who stole rare antique books worth €2.5 million from libraries across Europe. Books by Russian writers such as Pushkin and Gogol were substituted with valueless counterfeits

A cosmetic process known as a “vampire facial” is considered to be a more affordable and less invasive option than getting a facelift […] During a vampire facial, a person’s blood is drawn from their arm, and then platelets are separated out and applied to the patient’s face using microneedles […] three women who likely contracted HIV from receiving vampire facials at an unlicensed spa in New Mexico

Are women’s sexual preferences for men’s facial hair associated with their salivary testosterone during the menstrual cycle? […] participants selected the face they found most sexually attractive from pairs of composite images of the same men when fully bearded and when clean-shaven. The task was completed among the same participants during the follicular, peri-ovulatory (validated by the surge in luteinizing hormone) and luteal phases, during which participants also provided saliva samples for subsequent assaying of testosterone. […] We ran two models, both of which showed strong preferences among women for bearded over clean-shaven composite faces […] the main effect of cycle phase and the interaction between testosterone and cycle phase were not statistically significant

The effect of sound on physiology and development starts before birth, which is why a world that grows increasingly more noisy, with loud outdoor entertainment, construction, and traffic, is a concern. […] exposure of birds that are in the egg to moderate levels of noise can lead to developmental problems, amounting to increased mortality and reduced life-time reproductive success.

For the first time in at least a billion years, two lifeforms have merged into a single organism. The process, called primary endosymbiosis, has only happened twice in the history of the Earth, with the first time giving rise to all complex life as we know it through mitochondria. The second time that it happened saw the emergence of plants. Now, an international team of scientists have observed the evolutionary event happening between a species of algae commonly found in the ocean and a bacterium.

The man, who is referred to as “Mr. Blue Pentagon” after his favorite kind of LSD, gave researchers a detailed account of what he experienced when taking the drug during his music career in the 1970s. Mr. Pentagon was born blind. He did not perceive vision, with or without LSD. Instead, under the influence of psychedelics, he had strong auditory and tactile hallucinations, including an overlap of the two in a form of synesthesia.

In the 1979 murder trial of Dan White, his legal team seemed to attempt to blame his heinous actions on junk-food consumption. The press dubbed the tactic, the “Twinkie defense.” While no single crime can be blamed on diet, researchers have shown that providing inmates with healthy foods can reduce aggression, infractions, and anti-social behavior.

ScreenAnarchy: Sound And Vision: Jem Cohen

In the article series Sound and Vision we take a look at music videos from notable directors. This week we take a look at several music videos directed by Jem Cohen. Jem Cohen's style solidified almost from the get go. His hazy and haptic imagery, with a lot of textural grain lends a dreamlike quality to what otherwise is an observing documentary style. In his films there is some leeway to that style, easily flipping between fact and fiction, diary footage and essayist observations. Films like the masterpiece that is Museum Hours mix the three -documentary, fiction and essay film- into a hybrid blend. His films land upon certain truths, often by chance, sometimes by using earlier shot footage and recontextualizing them into a fictional...

In the article series Sound and Vision we take a look at music videos from notable directors. This week we take a look at several music videos directed by Jem Cohen. Jem Cohen's style solidified almost from the get go. His hazy and haptic imagery, with a lot of textural grain lends a dreamlike quality to what otherwise is an observing documentary style. In his films there is some leeway to that style, easily flipping between fact and fiction, diary footage and essayist observations. Films like the masterpiece that is Museum Hours mix the three -documentary, fiction and essay film- into a hybrid blend. His films land upon certain truths, often by chance, sometimes by using earlier shot footage and recontextualizing them into a fictional...

ScreenAnarchy: Trieste Science+Fiction Festival Wants Your Weird & Wonderful Films

Spring may have barely begun (at least in the Northern Hemisphere), but that means filmmakers are turning their thoughts to fall festivals. The autumn brings a deluge of genre festivals, but one of the standouts is Trieste Science+Fiction Festival. I had the pleasure to attend some years ago as a member of the jury, and I can attest not only to the beauty of the location, and the enthusiasm of the staff and volunteers, but the quality of the films in selectionl So if you have a science-fiction film, feature or short, for adults or for kids, send it their way. More details in the press release below. JOIN THE INTERGALACTIC HUB FOR SCI-FI LOVERS THE 24THE EDITION OF TRIESTE SCIENCE+FICTION FESTIVAL WILL RUN OCTOBER...

Spring may have barely begun (at least in the Northern Hemisphere), but that means filmmakers are turning their thoughts to fall festivals. The autumn brings a deluge of genre festivals, but one of the standouts is Trieste Science+Fiction Festival. I had the pleasure to attend some years ago as a member of the jury, and I can attest not only to the beauty of the location, and the enthusiasm of the staff and volunteers, but the quality of the films in selectionl So if you have a science-fiction film, feature or short, for adults or for kids, send it their way. More details in the press release below. JOIN THE INTERGALACTIC HUB FOR SCI-FI LOVERS THE 24THE EDITION OF TRIESTE SCIENCE+FICTION FESTIVAL WILL RUN OCTOBER...

Colossal: Dudi Ben Simon’s Playful Photos Draw on Visual Puns and Humourous Happenstance

All images © Dudi Ben Simon, shared with permission

When Dudi Ben Simon observes the world around her, visual puns and parallels are everywhere: a cinnamon bun stands in for a hair bun; the crinkled top of a lemon is cinched like a handbag; or yellow rubber glove stretches like melted cheese. “I see it as a type of readymade, a trend in art created by using objects or daily life items disconnected to their original context, changing their meanings and creating a new story from them,” the artist says. “I attempt to preserve the regular appearance of the items, but with a switch.”

Ben Simon also takes inspiration from the directness of advertising, focusing on a finely tuned, deceptively simple message that can both be read quickly and provoke humor or curiosity. “I truly believe in minimalism,” she says. “What is not required to tell the story does not exist.”

See more playful takes on everyday objects on Ben Simon’s Instagram. You might also enjoy Eric Kogan’s serendiptous street photography around New York City.

Do stories and artists like this matter to you? Become a Colossal Member today and support independent arts publishing for as little as $5 per month. The article Dudi Ben Simon’s Playful Photos Draw on Visual Puns and Humourous Happenstance appeared first on Colossal.

BOOOOOOOM! – CREATE * INSPIRE * COMMUNITY * ART * DESIGN * MUSIC * FILM * PHOTO * PROJECTS: Photographer Spotlight: Varvara Gorbunova

Varvara Gorbunova

Varvara Gorbunova’s Website

Varvara Gorbunova on Instagram

ScreenAnarchy: Arrow in May: Quarxx's PANDEMONIUM And Jennifer Reeder Selects

-thumb-200x200-93622.jpg) Part way through Spring and the folks at Arrow have a good lineup of films prepared for the month of May. Next month's programming is led by French horror flick, Pandemonium, which streams on the last week of the month. Jennifer Reeder, director of Perpetrator and Knives and Skin is this month's Selects honoree. Horror icons Tobe Hooper and Jack Hill both have films in the repertoire programming, which include early roles from the equally inconice Sid Haig and Robert Englund. Everything you need to know about May's lineup follows. ARROW Brings Pandemonium to their Streaming Service May 2024 Lineup Announced May 2024 Seasons: Jennifer Reeder Selects, Cunning Folk, The City that Never Sleeps, The Ick, Heaven or (Mostly) Hell ...

Part way through Spring and the folks at Arrow have a good lineup of films prepared for the month of May. Next month's programming is led by French horror flick, Pandemonium, which streams on the last week of the month. Jennifer Reeder, director of Perpetrator and Knives and Skin is this month's Selects honoree. Horror icons Tobe Hooper and Jack Hill both have films in the repertoire programming, which include early roles from the equally inconice Sid Haig and Robert Englund. Everything you need to know about May's lineup follows. ARROW Brings Pandemonium to their Streaming Service May 2024 Lineup Announced May 2024 Seasons: Jennifer Reeder Selects, Cunning Folk, The City that Never Sleeps, The Ick, Heaven or (Mostly) Hell ...

Ideas: Could resetting the body's clock help cure jet lag?

Canadian PhD graduate Kritika Vashishtha invented a new colour of light and combined it with artificial intelligence to fool the body into shifting time zones faster — creating a possible cure for jet lag. She tells IDEAS how this method could also help astronauts on Mars. *This episode is part of our series Ideas from the Trenches, which showcases fascinating new work by Canadian PhD students.

Michael Geist: The Law Bytes Podcast, Episode 201: Robert Diab on the Billion Dollar Lawsuits Launched By Ontario School Boards Against Social Media Giants

Concerns about the impact of social media on youth have been brewing for a long time, but in recent months a new battleground has emerged: the courts, who are home to lawsuits launched by school boards seeking billions in compensation and demands that the social media giants change their products to better protect kids. Those lawsuits have now come to Canada with four Ontario school boards recently filing claims.

Robert Diab is a professor of law at Thompson Rivers University in Kamloops, British Columbia. He writes about constitutional and human rights, as well as topics in law and technology. He joins the Law Bytes podcast to provide a comparison between the Canadian and US developments, a deep dive into alleged harms and legal arguments behind the claims, and an assessment of the likelihood of success.

The podcast can be downloaded here, accessed on YouTube, and is embedded below. Subscribe to the podcast via Apple Podcast, Google Play, Spotify or the RSS feed. Updates on the podcast on Twitter at @Lawbytespod.

Credits:

CP24, Four Ontario School Boards Suing Snapchat, TikTok and Meta for $4.5 Billion

The post The Law Bytes Podcast, Episode 201: Robert Diab on the Billion Dollar Lawsuits Launched By Ontario School Boards Against Social Media Giants appeared first on Michael Geist.

Open Culture: How Édouard Manet Became “the Father of Impressionism” with the Scandalous Panting, Le Déjeuner sur l’herbe (1863)

Édouard Manet’s Le Déjeuner sur l’herbe (1863) caused quite a stir when it made its public debut in 1863. Today, we might assume that the controversy surrounding the painting had to do with its containing a nude woman. But, in fact, it does not contain a nude woman — at least according to the analysis presented by gallerist-Youtuber James Payne in his new Great Art Explained video above. “The woman in this painting is not nude,” he explains. “She is naked.” Whereas “the nude is posed, perfect, idealized, the naked is just someone with no clothes on,” and, in this particular work, her faintly accusatory expression seems to be asking us, “What are you looking at?”

Here on Open Culture, we’ve previously featured Manet’s even more scandalous Olympia, which was first exhibited in 1865. In both that painting and Déjeuner, the woman is based on the same real person: Victorine Meurent, whom Manet used more frequently than any other model.

“A respected artist in her own right,” Meurent also “exhibited at the Paris Salon six times, and was inducted into the prestigious Société des Artistes Français in 1903.” That she got on that path after a working-class upbringing “shows a fortitude of mind and a strength of character that Manet needed for Déjeuner.” But whatever personality she exuded, her non-idealized nudity, or rather nakedness, couldn’t have changed art by itself.

Manet gave Meurent’s exposed body an artistic context, and a maximally provocative one at that, by putting it on a large canvas “normally reserved for historical, religious, and mythological subjects” and making choices — the visible brushstrokes, the stage-like background, the obvious classical allusions in a clearly modern setting — that deliberately emphasize “the artificial construction of the painting, and painting in general.” What underscores all this, of course, is that the men sitting with her all have their highly eighteen-sixties-looking clothes on. Manet may have changed the rules, opening the door for Impressionism, but he still reminds us how much of art’s power, whatever the period or movement, comes from sheer contrast.

Related Content:

The Scandalous Painting That Helped Create Modern Art: An Introduction to Édouard Manet’s Olympia

The Museum of Modern Art (MoMA) Puts Online 90,000 Works of Modern Art

Based in Seoul, Colin Marshall writes and broadcasts on cities, language, and culture. His projects include the Substack newsletter Books on Cities, the book The Stateless City: a Walk through 21st-Century Los Angeles and the video series The City in Cinema. Follow him on Twitter at @colinmarshall or on Facebook.

Open Culture: Bukowski Reads Bukowski: Watch a 1975 Documentary Featuring Charles Bukowski at the Height of His Powers

In 1973, Richard Davies directed Bukowski, a documentary that TV Guide described as a “cinema-verite portrait of Los Angeles poet Charles Bukowski.” The film finds Bukowski, then 53 years old, “enjoying his first major success,” and “the camera captures his reminiscences … as he walks around his Los Angeles neighborhood. Blunt language and a sly appreciation of his life form the core of the program, which includes observations by and about the women in his life.”

The original film clocked in at 46 minutes. Then, two years later, PBS released a “heavily-edited 28-minute version of the film,” using alternate scenes and a rearranged structure. Renamed Bukowski Reads Bukowski, the film aired on Thursday, October 16, 1975. And, true to its name, the film features footage of Bukowski reading his poems, starting with “The Rat,” from the 1972 collection Mockingbird Wish Me Luck. You can watch Bukowski Reads Bukowski above, and find more Bukowski readings in the Relateds below.

Related Content

Hear 130 Minutes of Charles Bukowski’s First-Ever Recorded Readings (1968)

Charles Bukowski Reads His Poem “The Secret of My Endurance

Tom Waits Reads Charles Bukowski

Four Charles Bukowski Poems Animated

Penny Arcade: Falling In

Disquiet: Where and How I Listen

I have two very small office areas: one at home and one that I rent nearby. Neither has a proper stereo system.

The home office has a small modular synth setup next to my desk. For space-management reasons the speakers (monitors, actually, in music-equipment speak) sit perpendicular to my desk, above the synth. There I usually listen to music on my laptop speakers or headphones. My laptop, a MacBook Pro 14″ (the M1, which is somehow several generations behind but feels quite peppy and looks brand new), has fantastic built-in speakers, but when I really want to listen to something, I walk into the living room, which has proper speakers connected to what once was a proper stereo system and now inspires people point and stare and ask what the heck those big things are beneath the television and why don’t I just have a Bluetooth something or other. I have a Plex system running on a Mac Mini attached to the home stereo, so I can easily collate my digital music files (notably: inbound material I’m considering for review), listen to them in the living room, and access them elsewhere with my phone, iPad, or laptop.

The rental office is self-enclosed but in a shared building with an active hallway, so I only listen to music there on headphones and earbuds, so as not to bug anyone. My main extravagance is I bought a second guitar when I got the rental office, so I can be a terrible guitarist in two places rather than just one, and to avoid looking like an oddly clean-cut itinerant musician were I to walk back and forth with the guitar between home and office regularly.

That is where and how I listen.

Schneier on Security: Whale Song Code

During the Cold War, the US Navy tried to make a secret code out of whale song.

The basic plan was to develop coded messages from recordings of whales, dolphins, sea lions, and seals. The submarine would broadcast the noises and a computer—the Combo Signal Recognizer (CSR)—would detect the specific patterns and decode them on the other end. In theory, this idea was relatively simple. As work progressed, the Navy found a number of complicated problems to overcome, the bulk of which centered on the authenticity of the code itself.

The message structure couldn’t just substitute the moaning of a whale or a crying seal for As and Bs or even whole words. In addition, the sounds Navy technicians recorded between 1959 and 1965 all had natural background noise. With the technology available, it would have been hard to scrub that out. Repeated blasts of the same sounds with identical extra noise would stand out to even untrained sonar operators.

In the end, it didn’t work.

Daniel Lemire's blog: Careful with Pair-of-Registers instructions on Apple Silicon

Egor Bogatov is an engineer working on C# compiler technology at Microsoft. He had an intriguing remark about a performance regression on Apple hardware following what appears to be an optimization. The .NET 9.0 runtime introduced the optimization where two loads (ldr) could be combined into a single load (ldp). It is a typical peephole optimization. Yet it made things much slower in some cases.

Under ARM, the ldr instruction is used to load a single value from memory into a register. It operates on a single register at a time. Its assembly syntax is straightforward ldr Rd, [Rn, #offset]. The ldp instruction (Load Pair of Registers) loads two consecutive values from memory into two registers simultaneously. Its assembly syntax is similar but there are two destination registers: ldp Rd1, Rd2, [Rn, #offset]. The ldp instruction loads two 32-bit words or two 64-bit words from memory, and writes them to two registers.

Given a choice, it seems that you should prefer the ldp instruction. After all, it is a single instruction. But there is a catch on Apple silicon: if you are loading data from a memory that was just written to, there might be a significant penalty to ldp.

To illustrate, let us consider the case where we write and load two values repeatedly using two loads and two stores:

for (int i = 0; i < 1000000000; i++) { int tmp1, tmp2; __asm__ volatile("ldr %w0, [%2]\n" "ldr %w1, [%2, #4]\n" "str %w0, [%2]\n" "str %w1, [%2, #4]\n" : "=&r"(tmp1), "=&r"(tmp2) : "r"(ptr):); }

Next, let us consider an optimized approach where we combine the two loads into a single one:

for (int i = 0; i < 1000000000; i++) { int tmp1, tmp2; __asm__ volatile("ldp %w0, %w1, [%2]\n" "str %w0, [%2]\n" "str %w1, [%2, #4]\n" : "=&r"(tmp1), "=&r"(tmp2) : "r"(ptr) :); }

It would be surprising if this new version was slower, but it can be. The code for the benchmark is available. I benchmarked both on AWS using Amazon’s graviton 3 processors, and on Apple M2. Your results will vary.

| function | graviton 3 | Apple M2 |

|---|---|---|

| 2 loads, 2 stores | 2.2 ms/loop | 0.68 ms/loop |

| 1 load, 2 stores | 1.6 ms/loop | 1.6 ms/loop |

I have no particular insight as to why it might be, but my guess is that Apple Silicon has a Store-to-Load forwarding optimization that does not work with Pair-Of-Registers loads and stores.

There is an Apple Silicon CPU Optimization Guide which might provide better insight.

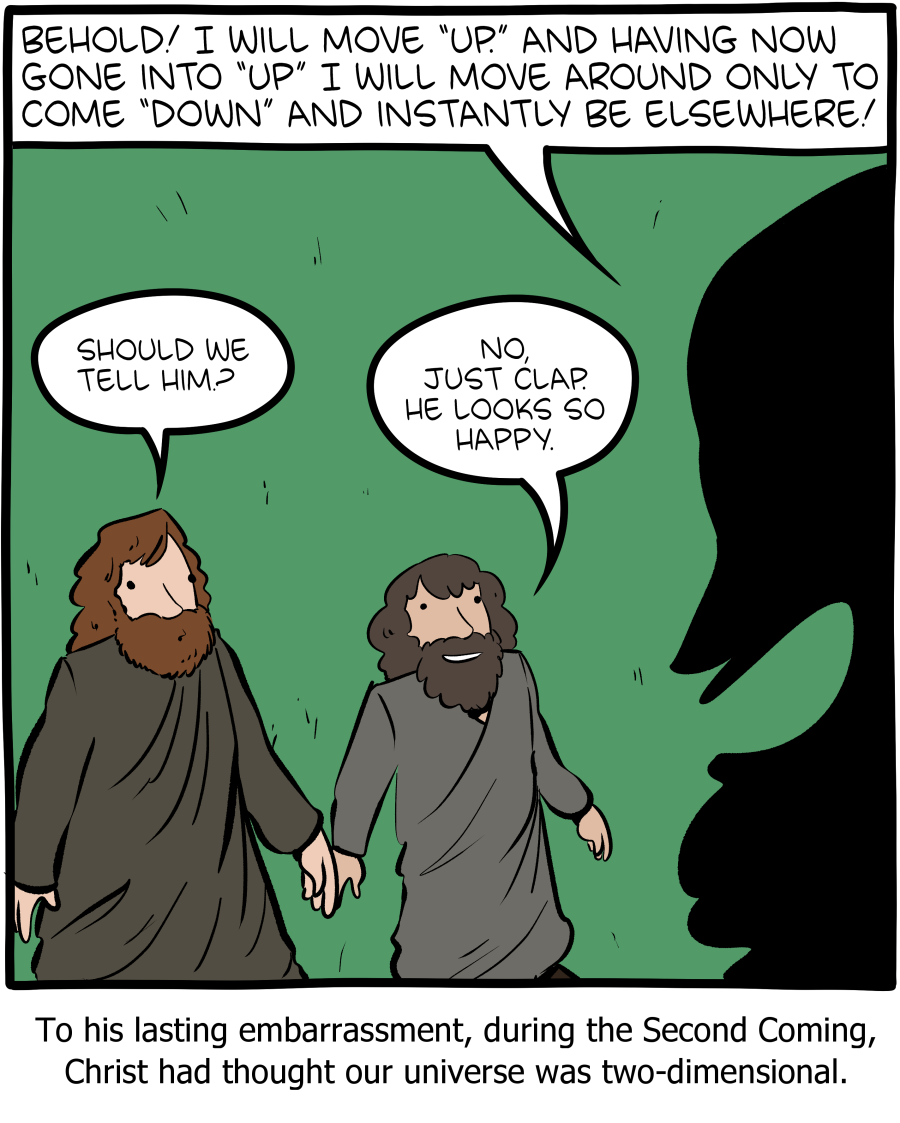

Saturday Morning Breakfast Cereal: Saturday Morning Breakfast Cereal - Up

Click here to go see the bonus panel!

Hovertext:

I wonder how many miracles get boring if you just grant god an extra dimension?

Today's News:

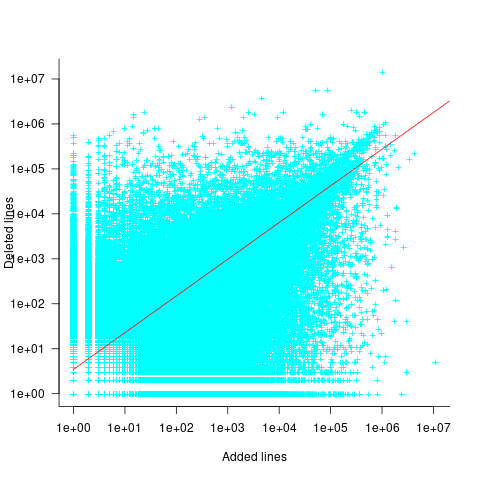

The Shape of Code: Chinchilla Scaling: A replication using the pdf

The paper Chinchilla Scaling: A replication attempt by Besiroglu, Erdil, Barnett, and You caught my attention. Not only a replication, but on the first page there is the enticing heading of section 2, “Extracting data from Hoffmann et al.’s Figure 4”. Long time readers will know of my interest in extracting data from pdfs and images.

This replication found errors in the original analysis, and I, in turn, found errors in the replication’s data extraction.

Besiroglu et al extracted data from a plot by first converting the pdf to Scalable Vector Graphic (SVG) format, and then processing the SVG file. A quick look at their python code suggested that the process was simpler than extracting directly from an uncompressed pdf file.

Accessing the data in the plot is only possible because the original image was created as a pdf, which contains information on the coordinates of all elements within the plot, not as a png or jpeg (which contain information about the colors appearing at each point in the image).

I experimented with this pdf-> svg -> csv route and quickly concluded that Besiroglu et al got lucky. The output from tools used to read-pdf/write-svg appears visually the same, however, internally the structure of the svg tags is different from the structure of the original pdf. I found that the original pdf was usually easier to process on a line by line basis. Besiroglu et al were lucky in that the svg they generated was easy to process. I suspect that the authors did not realize that pdf files need to be decompressed for the internal operations to be visible in an editor.

I decided to replicate the data extraction process using the original pdf as my source, not an extracted svg image. The original plots are below, and I extracted Model size/Training size for each of the points in the left plot (code+data):

What makes this replication and data interesting?

Chinchilla is a family of large language models, and this paper aimed to replicate an experimental study of the optimal model size and number of tokens for training a transformer language model within a specified compute budget. Given the many millions of £/$ being spent on training models, there is a lot of interest in being able to estimate the optimal training regimes.

The loss model fitted by Besiroglu et al, to the data they extracted, was a little different from the model fitted in the original paper:

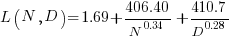

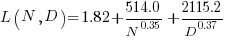

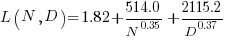

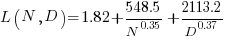

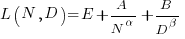

Original:

Replication:

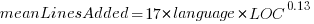

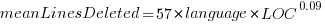

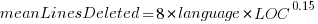

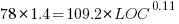

where:  is the number of model parameters, and

is the number of model parameters, and  is the number of training tokens.

is the number of training tokens.

If data extracted from the pdf is different in some way, then the replication model will need to be refitted.

The internal pdf operations specify the x/y coordinates of each colored circle within a defined rectangle. For this plot, the bottom left/top right coordinates of the rectangle are: (83.85625, 72.565625), (421.1918175642, 340.96202) respectively, as specified in the first line of the extracted pdf operations below. The three values before each rg operation specify the RGB color used to fill the circle (for some reason duplicated by the plotting tool), and on the next line the /P0 Do is essentially a function call to operations specified elsewhere (it draws a circle), the six function parameters precede the call, with the last two being the x/y coordinates (e.g., x=154.0359138125, y=299.7658568695), and on subsequent calls the x/y values are relative to the current circle coordinates (e.g., x=-2.4321790463 y=-34.8834544196).

Q Q q 83.85625 72.565625 421.1918175642 340.96202 re W n 0.98137749

0.92061729 0.86536915 rg 0 G 0.98137749 0.92061729 0.86536915 rg

1 0 0 1 154.0359138125 299.7658568695 cm /P0 Do

0.97071849 0.82151775 0.71987163 rg 0.97071849 0.82151775 0.71987163 rg

1 0 0 1 -2.4321790463 -34.8834544196 cm /P0 Do

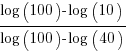

The internal pdf x/y values need to be mapped to the values appearing on the visible plot’s x/y axis. The values listed along a plot axis are usually accompanied by tick marks, and the pdf operation to draw these tick marks will contain x/y values that can be used to map internal pdf coordinates to visible plot coordinates.