Recent CPAN uploads - MetaCPAN: DateTime-Format-Genealogy-0.06

Create a DateTime object from a Genealogy Date

Changes for 0.06 - 2024-04-23T08:28:40Z

- Handle entries which have the French 'Mai' instead of the English 'May'

- Some messages were printed even in quiet mode

- Handle '1517-05-04' as '04/05/1517'
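The two parsing changes in this release can be illustrated with a minimal Python sketch. This is not the module's Perl implementation or its API: the function name `normalise_genealogy_date` and the lookup table are hypothetical, and only the two behaviours named in the changelog are modelled.

```python
import re

# Hypothetical lookup table: the changelog only mentions 'Mai' -> 'May'.
FRENCH_TO_ENGLISH = {"mai": "May"}

# ISO-style dates such as '1517-05-04'.
ISO_DATE = re.compile(r"^(\d{4})-(\d{2})-(\d{2})$")


def normalise_genealogy_date(text: str) -> str:
    """Rewrite a raw date string into a form a day-first parser could accept.

    Assumption: this only illustrates the changelog entries; the real module
    does far more than this helper.
    """
    text = text.strip()
    match = ISO_DATE.match(text)
    if match:
        year, month, day = match.groups()
        # '1517-05-04' is treated as day 04, month 05, year 1517.
        return f"{day}/{month}/{year}"
    # Replace known French month names, leaving every other word untouched.
    words = [FRENCH_TO_ENGLISH.get(word.lower(), word) for word in text.split()]
    return " ".join(words)


print(normalise_genealogy_date("1517-05-04"))
print(normalise_genealogy_date("4 Mai 1517"))
```

Strings that match neither rule pass through unchanged, so a helper like this could sit in front of an existing parser without altering dates it already handles.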

OCaml Weekly News: OCaml Weekly News, 23 Apr 2024

- A second beta for OCaml 5.2.0

- An implementation of purely functional double-ended queues

- Feedback / Help Wanted: Upcoming OCaml.org Cookbook Feature

- Picos — Interoperable effects based concurrency

- Ppxlib dev meetings

- Ortac 0.2.0

- OUPS meetup april 2024

- Mirage 4.5.0 released

- patricia-tree 0.9.0 - library for patricia tree based maps and sets

- OCANNL 0.3.1: a from-scratch deep learning (i.e. dense tensor optimization) framework

- Other OCaml News

Recent additions: bearriver 0.14.8

FRP Yampa replacement implemented with Monadic Stream Functions.

Recent additions: dunai-test 0.12.3

Testing library for Dunai

Recent additions: dunai 0.12.3

Generalised reactive framework supporting classic, arrowized and monadic FRP.

Recent CPAN uploads - MetaCPAN: Error-Show-v0.4.0

Show context around syntax errors and exceptions

Changes for v0.4.0 - 2024-04-23

- fixes

- new features

- improvements

- other

Hackaday: Your Smart TV Does 4K, Surround Sound, Denial-of-service…

Any reader who has bought a TV in recent years will know that it’s now almost impossible to buy one that’s just a TV. Instead they are all “smart” TVs, with an on-board computer running a custom OS with a pile of streaming apps installed. It fits an age in which linear broadcast TV is looking increasingly archaic, but it brings with it a host of new challenges.

Normally you’d expect us to launch into a story of privacy invasion from a TV manufacturer at this point, but instead we’ve got [Priscilla]’s experience, in which her HiSense Android TV executed a denial of service on the computers on her network.

The root of the problem appears to be the TV running continuous network discovery attempts using random UUIDs. When this happens every few minutes for a year or more, it overloads the key caches on other networked machines. The PC which brought the problem to light was a Windows machine, which leaves us sincerely hoping that our Linux boxen might be immune.

It’s fair to place this story more under the heading of bugs than of malicious intent, but even so it’s something that should never have made it to production. The linked story advises nobody to buy a HiSense TV, but we doubt that other manufacturers are immune to similar problems.

Header: William Hook, CC-BY-SA 2.0.

Thanks [Concretedog] for the tip.

Recent additions: haskoin-store 1.5.1

Storage and index for Bitcoin and Bitcoin Cash

Recent CPAN uploads - MetaCPAN: XS-Parse-Keyword-0.40

XS functions to assist in parsing keyword syntax

Changes for 0.40 - 2024-04-23

- CHANGES

- BUGFIXES

Recent CPAN uploads - MetaCPAN: Syntax-Operator-Zip-0.10

infix operator to compose two lists together

Changes for 0.10 - 2024-04-23

- CHANGES

Recent additions: hspec-meta 2.11.8

A version of Hspec which is used to test Hspec itself

Slashdot: NASA Officially Greenlights $3.35 Billion Mission To Saturn's Moon Titan

Read more of this story at Slashdot.

Recent CPAN uploads - MetaCPAN: App-cat-v-0.9903

cat-v command implementation

Changes for 0.9903 - 2024-04-23T09:23:56Z

- change default tabstyle name to "needle"

Open Culture: A Guided Tour of the Largest Handmade Model of Imperial Rome: Discover the 20x20 Meter Model Created During the 1930s

At the moment, you can’t see the largest, most detailed handmade model of Imperial Rome for yourself. That’s because the Museo della Civiltà Romana, the institution that houses it, has been closed for renovations since 2014. But you can get a guided tour of “Il Plastico,” as this grand Rome-in-miniature is known, through the new Ancient Rome Live video above. “The archaeologist and architect Italo Gismondi created this amazing model,” explains host Darius Arya, previously featured here on Open Culture for his tour of Pompeii. Working at a 1:250 scale, Gismondi built most of Il Plastico between 1933 and 1937, with later expansions after its installation in the Museo della Civiltà Romana.

Archaeologists and other scholars have, of course, learned more about the Eternal City over the past nine decades, knowledge reflected in regularly updated digital models like Rome Reborn. But none have shown Gismondi’s dedication to painstaking manual labor, which allowed him to craft practically every then-known architectural and infrastructural feature within the walls of Rome in the Constantinian age, from 306 to 337 AD.

Arya points out recognizable landmarks like the Colosseum, the Forum, and the Pyramid of Cestius as well as bridges, river fortifications, aqueducts, and even landscaping details down to the level of individual trees.

Even when the camera zooms way in, Gismondi’s Rome looks practically habitable (and indeed, it may appeal to some viewers more than do the modern European cities that are its descendants). It’s no wonder that Ridley Scott, a director famously sensitive to visual impact, would use the model in Gladiator. And while a video tour like Arya’s provides a closer-up view of many sections of Il Plastico than one can get in person, the only way to fully appreciate the sheer scale of the achievement is to behold its physical reality. Luckily, you should be able to do just that next year, when the Museo della Civiltà Romana is scheduled to reopen at long last. But then, no more could Rome be built in a day than its museum could be renovated in a mere decade.

Related content:

A Huge Scale Model Showing Ancient Rome at Its Architectural Peak (Built Between 1933 and 1937)

Rome Reborn: A New 3D Virtual Model Lets You Fly Over the Great Monuments of Ancient Rome

Interactive Map Lets You Take a Literary Journey Through the Historic Monuments of Rome

Ancient Rome’s System of Roads Visualized in the Style of Modern Subway Maps

Based in Seoul, Colin Marshall writes and broadcasts on cities, language, and culture. His projects include the Substack newsletter Books on Cities, the book The Stateless City: a Walk through 21st-Century Los Angeles and the video series The City in Cinema. Follow him on Twitter at @colinmarshall or on Facebook.

MetaFilter: It's peculiar, in the sense that words are supposed to mean something

MetaFilter: How social networks prey on our longing to be known

" An up close and personal look into why we should be extremely careful when sharing about ourselves online, no matter how shiny an app or network might be." Jan Maarten writes about how the urge to reveal is exploited by each new service we engage with.

Open Culture: Watch Iconic Artists at Work: Rare Videos of Picasso, Matisse, Kandinsky, Renoir, Monet, Pollock & More

Claude Monet, 1915:

We’ve all seen their works in fixed form, enshrined in museums and printed in books. But there’s something special about watching a great artist at work. Over the years, we’ve posted film clips of some of the greatest artists of the 20th century caught in the act of creation. Today we’ve gathered together eight of our all-time favorites.

Above is the only known film footage of the French Impressionist Claude Monet, made when he was 74 years old, painting alongside a lily pond in his garden at Giverny. The footage was shot in the summer of 1915 by the French actor and dramatist Sacha Guitry for his patriotic World War I‑era film, Ceux de Chez Nous, or “Those of Our Land.” For more information, see our previous post, “Rare Film: Claude Monet at Work in His Famous Garden at Giverny, 1915.”

Pierre-Auguste Renoir, 1915:

You may never look at a painting by the French Impressionist Pierre-Auguste Renoir in quite the same way after seeing the footage above, which is also from Sacha Guitry’s Ceux de Chez Nous. Renoir suffered from severe rheumatoid arthritis during the last decades of his life. By the time this film was made in June of 1915, the 74-year-old Renoir was physically deformed and in constant pain. The painter’s 14-year-old son Claude is shown placing the brush in his father’s permanently clenched hand. To learn more about the footage and about Renoir’s terrible struggle with arthritis, be sure to read our post, “Astonishing Film of Arthritic Impressionist Painter, Pierre-Auguste Renoir (1915).”

Auguste Rodin, 1915:

The footage above, again by Sacha Guitry, shows the French sculptor Auguste Rodin in several locations, including his studio at the dilapidated Hôtel Biron in Paris, which later became the Musée Rodin. The film was made in late 1915, when Rodin was 74 years old. For more on Rodin and the Hôtel Biron, please see: “Rare Film of Sculptor Auguste Rodin working at his Studio in Paris (1915).”

Wassily Kandinsky, 1926:

In 1926, filmmaker Hans Cürlis took the rare footage above of the Russian abstract painter Wassily Kandinsky applying paint to a blank canvas at the Galerie Neumann-Nierendorf in Berlin. Kandinsky was about 59 years old at the time, and teaching at the Bauhaus. To learn more about Kandinsky and to watch a video of actress Helen Mirren discussing his work at the Museum of Modern Art in New York, see our post, “The Inner Object: Seeing Kandinsky.”

Henri Matisse, 1946:

The French artist Henri Matisse is shown above when he was 76 years old, making a charcoal sketch of his grandson, Gerard, at his home and studio in Nice. The clip is from a 26-minute film made by François Campaux for the French Department of Cultural Relations. To read a translation of Matisse’s spoken words and to watch a clip of the artist working on one of his distinctive paper cut-outs, go to “Vintage Film: Watch Henri Matisse Sketch and Make His Famous Cut-Outs (1946).”

Pablo Picasso, 1950:

In the famous footage above, Spanish artist Pablo Picasso paints on glass at his studio in the village of Vallauris, on the French Riviera. It’s from the 1950 film Visite à Picasso (A Visit with Picasso) by Belgian filmmaker Paul Haesaerts. Picasso was about 68 years old at the time. You can find the full 19-minute film here.

Jackson Pollock, 1951:

In the short film above, called Jackson Pollock 51, the American abstract painter talks about his work and creates one of his distinctive drip paintings before our eyes. The film was made by Hans Namuth when Pollock was 39 years old. To learn about Pollock and his fateful collaboration with Namuth, see “Jackson Pollock: Lights, Camera, Paint! (1951).”

Alberto Giacometti, 1965:

The Swiss artist Alberto Giacometti is most famous for his thin, elongated sculptures of the human form. But in the clip above from the 1966 film Alberto Giacometti by the Swiss photographer Ernst Scheidegger, Giacometti is shown working in another medium as he paints the foundational lines of a portrait at his studio in Paris. The footage was apparently shot in 1965, when Giacometti was about 64 years old and had less than a year to live. To learn about Giacometti’s approach to drawing and to read a translation of the German narration in this clip, be sure to see our post, “Watch as Alberto Giacometti Paints and Pursues the Elusive ‘Apparition,’ (1965).”

Related Content:

1922 Photo: Claude Monet Stands on the Japanese Footbridge He Painted Through the Years

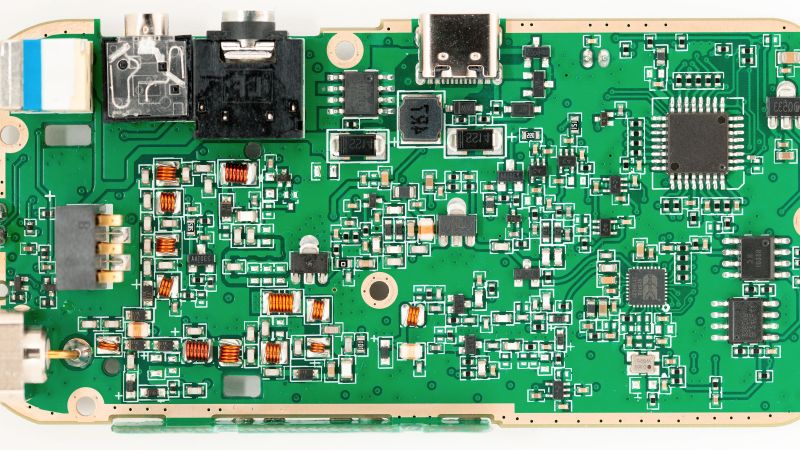

Hackaday: Reverse Engineering the Quansheng Hardware

In the world of cheap amateur radio transceivers, the Quansheng UV-K5 can’t be beaten for hackability. But pretty much every hack we’ve seen so far focuses on the firmware. What about the hardware?

To answer that question, [mentalDetector] enlisted the help of a few compatriots and vivisected a UV-K5 to find out what makes it tick. The result is a (nearly) complete hardware description of the radio, including schematics, PCB design files, and 3D renders. The radio was a malfunctioning unit donated by collaborator [Manuel], who desoldered all the components and measured the ones he could to determine specific values. The parts that resisted his investigations were bundled up along with the stripped PCB and sent to [mentalDetector], who used a NanoVNA to characterize them as well as possible. Documentation was up to collaborator [Ludwich], who also made tweaks to the schematic as it developed.

PCB reverse engineering was pretty intense. The front and back of the PCB — rev 1.4, for those playing along at home — were carefully photographed before getting the sandpaper treatment to reveal the inner two layers. The result was a series of high-resolution photos that were aligned to show which traces connected to which components or vias, which led to the finished schematics.

There are still a few unknown components, mostly capacitors by the look of it, but the bulk of the work has been done, and hats off to the team for that. This should make hardware hacks on the radio much easier, and we’re looking forward to what’ll come from this effort. If you want to see some of the firmware exploits that have already been accomplished on this radio, check out the Trojan Pong upgrade, or the possibilities of band expansion. We’ve also seen a mixed hardware-firmware upgrade that really shines.

Slashdot: Voyager 1 Resumes Sending Updates To Earth


The Universe of Discourse: R.I.P. Oddbins

I've just learned that Oddbins, a British chain of discount wine and liquor stores, went out of business last year. I was in an Oddbins exactly once, but I feel warmly toward them and I was sorry to hear of their passing.

In February of 2001 I went into the Oddbins on Canary Wharf and asked for bourbon. I wasn't sure whether they would even sell it. But they did, and the counter guy recommended I buy Woodford Reserve. I had not heard of Woodford before but I took his advice, and it immediately became my favorite bourbon. It still is.

I don't know why I was trying to buy bourbon in London. Possibly it was pure jingoism. If so, the Oddbins guy showed me up.

Thank you, Oddbins guy.


Disquiet: White Van, Whiteboard

This old white van is something of a neighborhood white board. It gets written over, and then it’s painted over, and then the circle of urban life begins anew.

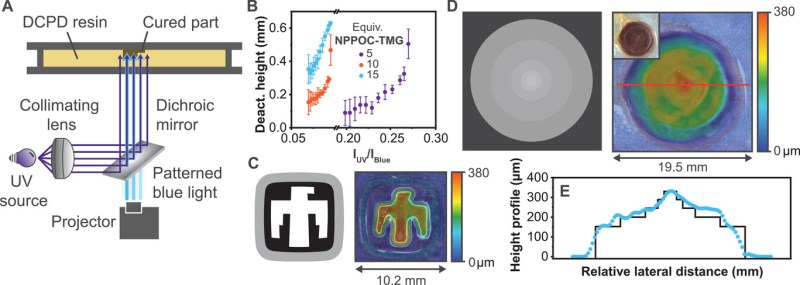

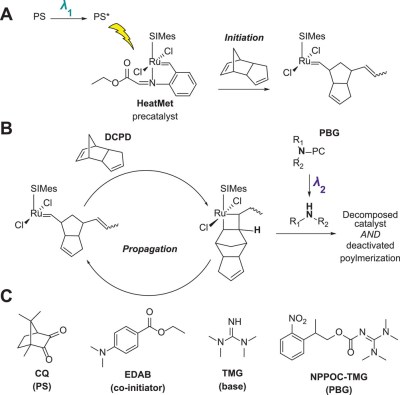

Hackaday: Dual-Wavelength SLA 3D Printing: Fast Continuous Printing With ROMP And FRP Resins

As widespread as 3D printing with stereolithography (SLA) is in the consumer market, these additive manufacturing (AM) machines are limited to a single UV light source and to free-radical polymerization (FRP) resins. The effect is that the object is printed in layers, with each layer adhering not only to the previous layer, but also to the transparent (FEP or similar) film at the bottom of the resin vat. The resulting peeling of the layer from the film not only necessitates a pause in the printing process, but also puts significant stress on the part being printed. Over the years a few solutions have been developed, with Sandia National Laboratories’ SWOMP technology (PR version) being among the latest.

Unlike the more common FRP-based SLA resins, SWOMP (Selective Dual-Wavelength Olefin Metathesis 3D-Printing) uses ring-opening metathesis polymerization (ROMP), which itself has been commercialized since the 1970s, but was not previously used with photopolymerization in this fashion. For the monomer, dicyclopentadiene (DCPD) was chosen, with HeatMet (HM) as the photo-active olefin metathesis catalyst. This provides the UV sensitivity, while an added photobase generator (PBG) can be used to selectively deactivate polymerization.

The advantage of DCPD is that the material and the resulting objects are very robust; they are commonly thermally post-cured (250 °C for 30 seconds for the dogbones in this experiment) to gain their full mechanical properties. Meanwhile the same dual-wavelength setup is used for continuous SLA printing, as previously covered by e.g. [Martin P. de Beers] and colleagues in a 2019 paper in Science Advances. The photoinhibitor used with FRP and ROMP resins not only prevents polymerized resin from attaching to the transparent film or window; because the photoinhibition depth can be controlled locally, dual-wavelength SLA is also not limited to single layers and can print entire topological features in a single pass.

This method might therefore be better than both existing FRP-based mono-wavelength SLA and the proprietary CLIP technology by Carbon with its oxygen-permeable membrane, with no peeling and with print speeds many times those of conventional SLA. Currently Sandia is looking for partners to develop and commercialize this technology, raising the hope that such dual-wavelength SLA printers may come onto the market from manufacturers which do not require a security clearance and/or proof of financial liquidity before you even get to talk to a salesperson.

Slashdot: California Is Grappling With a Growing Problem: Too Much Solar


MetaFilter: No One Buys Books

Q. Do you know approximately how many authors there are across the industry with 500,000 units or more during this four-year period?

A. My understanding is that it was about 50.

Q. 50 authors across the publishing industry who during this four-year period sold more than 500,000 units in a single year?

A. Yes.

— Madeline McIntosh, CEO, Penguin Random House US

The publishing industry is supported, it turns out, primarily by outlier smash hits, the Dan Browns of the world, and by the continued sale of reliable titles on the backlist, like the Lord of the Rings. Advances to new authors function almost like venture capital, resulting in a loss for the large majority, based on the hope that a small minority will become runaway best sellers, subsidizing the rest.

Schneier on Security: Microsoft and Security Incentives

Former senior White House cyber policy director A. J. Grotto talks about the economic incentives for companies to improve their security—in particular, Microsoft:

Grotto told us Microsoft had to be “dragged kicking and screaming” to provide logging capabilities to the government by default, and given the fact the mega-corp banked around $20 billion in revenue from security services last year, the concession was minimal at best.

[…]

“The government needs to focus on encouraging and catalyzing competition,” Grotto said. He believes it also needs to publicly scrutinize Microsoft and make sure everyone knows when it messes up.

“At the end of the day, Microsoft, any company, is going to respond most directly to market incentives,” Grotto told us. “Unless this scrutiny generates changed behavior among its customers who might want to look elsewhere, then the incentives for Microsoft to change are not going to be as strong as they should be.”

Breaking up the tech monopolies is one of the best things we can do for cybersecurity.

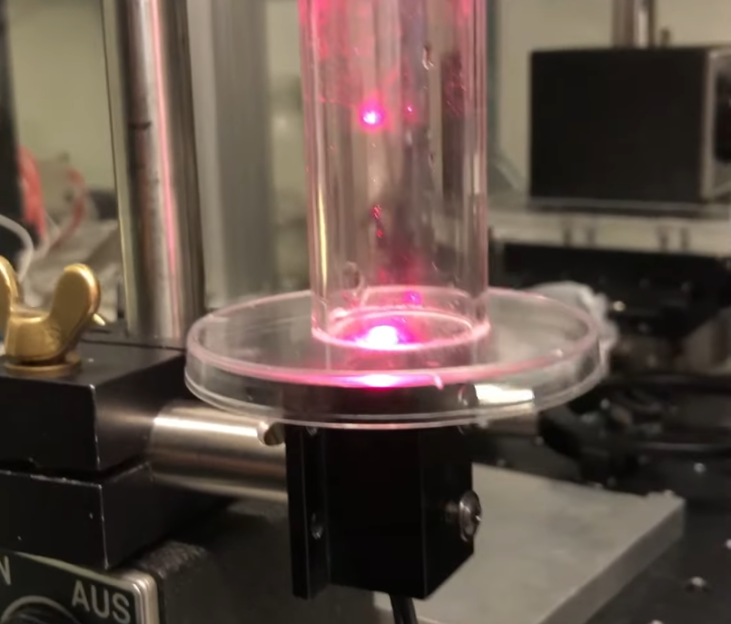

Hackaday: Optical Tweezers Investigate Tiny Particles

No matter how small you make a pair of tweezers, there will always be things that tweezers aren’t great at handling. Among those are various fluids, and especially aerosolized droplets, which can’t be easily picked apart and examined by a blunt tool like tweezers. For that you’ll want to reach for a specialized instrument like this laser-based tool, which can illuminate and manipulate tiny droplets and other particles.

[Janis]’s optical tweezers use both a 170 milliwatt laser from a DVD burner and a second, more powerful half-watt blue laser. Using these lasers, a mist of fine particles, in this case glycerol, can be investigated for particle size among other physical characteristics. First, he looks for a location in a test tube where movement of the particles from convective heating and the chimney effect is minimized. Once a favorable location is found, a specific particle can be trapped by the laser and will exhibit diffraction rings, a scattering of the laser light in a specific pattern which can provide more information about the trapped particle.

Admittedly this is a niche tool that might not get a lot of attention outside of certain interests, but for those working with proteins or individual molecules, measuring and studying cells, or, like this project, investigating colloidal particles, it can be indispensable. It’s also interesting how one can be built largely from used optical drives, like this laser engraver that uses more than just the laser, or even this scanning laser microscope.

Slashdot: Pareto's Economic Theories Used To Find the Best Mario Kart 8 Racer


Slashdot: Apple Acquires Datakalab, a French Startup Behind AI and Computer Vision Tech


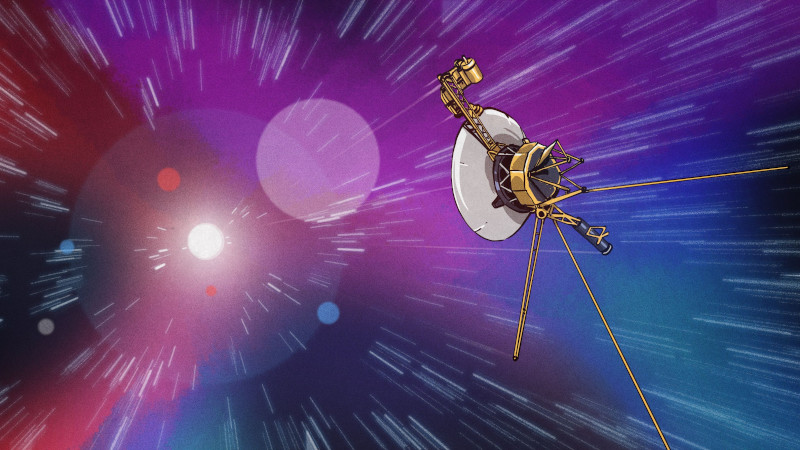

Hackaday: NASA’s Voyager 1 Resumes Sending Engineering Updates to Earth

After many tense months, it seems that thanks to a gaggle of brilliant engineering talent and a lucky break, the Voyager 1 spacecraft is back in action. Confirmation came on April 20th, when Voyager 1 transmitted its first data since it fell silent on November 14, 2023. As previously suspected, the issue was a defective memory chip in the flight data system (FDS), which among other things is responsible for preparing the data it receives from other systems before it is transmitted back to Earth. As Voyager 1 is currently about 24 billion kilometers away, this made for a few tense days for those involved.

The firmware patch sent on April 18th contained an initial test to validate the theory: it moved the code responsible for packaging the engineering data to a new spot in the FDS memory. If the theory was correct, Voyager should then send back correct data. Two 22.5-hour trips and change through deep space later, on April 20th, the team was ecstatic to see what they had hoped for.
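The signal delay follows directly from the distance quoted above. As a rough sketch, assuming the rounded figure of 24 billion kilometers and a signal travelling at the speed of light in vacuum (the function name is illustrative only):

```python
# Speed of light in vacuum, km/s (exact by definition of the metre).
SPEED_OF_LIGHT_KM_S = 299_792.458


def one_way_light_time_hours(distance_km: float) -> float:
    """Time for a radio signal to cover distance_km, in hours."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 3600.0


# Assumption: the article's rounded distance of about 24 billion km.
distance_km = 24e9
one_way = one_way_light_time_hours(distance_km)
print(f"one-way: {one_way:.1f} h, round trip: {2 * one_way:.1f} h")
```

With the rounded distance this comes out a little over 22 hours each way, in line with the roughly 22.5 hours quoted, so a command sent on April 18th could not be confirmed before April 20th.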

With this initial test successful, the team can now move the remaining code away from the faulty memory, after which regular science operations should resume, giving the plucky spacecraft a new lease on life at the still tender age of 46.

Colossal: Hot Dogs, Rats, and Birkin Bags: Paa Joe’s Wooden Coffins Are an Ode to NYC’s Ubiquitous Sights

“Yellow Cab” (2024), Emele wood, enamel, cloth, acrylic, 92 x 27 x 44 inches. All images courtesy of Superhouse, shared with permission

New Yorkers are known for their unwavering devotion to the city, but would they want to spend eternity inside one of its once-ubiquitous taxis or worse yet, in the body of a wildly resilient subway rat?

In Celestial City at Superhouse, Ghanaian artist Paa Joe presents a sculptural ode to the Big Apple by carving an oversized rendition of the fruit, a Heinz ketchup bottle, a bagel with schmear, and more urban icons. Invoking the charms of all five boroughs, the painted wooden works open up to reveal the soft, padded insides of coffins, and two—the car and condiment—are even fit for humans.

Installation view of ‘Celestial City’

Since 1960, Paa Joe has been crafting caskets, which are known as abeduu adeka or proverb boxes to the Ga people, a community to which the artist belongs. Coffins are a crucial component of the safe passage of the dead to the afterlife and a family tradition for Paa Joe. A statement says:

In the early 1950s, Paa Joe’s uncle, Kane Kwei pioneered the first figurative coffin, a cocoa pod intended for a chief as a ceremonial palanquin. When the chief passed away during its construction, it was repurposed as his coffin. This innovative art form quickly gained popularity, and Kane Kwei began creating bespoke commissions resembling living and inanimate objects, symbolizing the deceased individual’s identity (an onion for a farmer, an eagle for a community leader, a sardine for a fisherman, etc.).

Paa Joe continues this legacy today with his Fantasy Coffins series. In addition to the New York tributes, his works include a Campbell’s soup can, an Air Jordan sneaker, fish, and fruit. The sculptures often exaggerate scale, including the diminutive Statue of Liberty and a gigantic hot dog that shift perspectives on the quotidian.

Celestial City is on view through April 27. For a glimpse into Paa Joe’s carving process, visit Instagram.

Detail of “Sabrett” (2023), Emele wood, enamel, cloth, 23.6 x 16.5 x 11 inches

“Sabrett” (2023), Emele wood, enamel, cloth, 23.6 x 16.5 x 11 inches

Left: “Subway Rat” (2023), Emele wood, enamel, 24.4 x 12.6 x 11.8 inches. Right: “Heinz” (2024), Emele wood, enamel, cloth, 26.5 x 22.5 x 94 inches

“Guggenheim” (2024), Emele wood, enamel, cloth, 29 x 22.5 x 26.5 inches

Detail of “Guggenheim” (2024), Emele wood, enamel, cloth, 29 x 22.5 x 26.5 inches

Detail of “Big Apple” (2024), Emele wood, enamel, artificial leaves, 19.5 D x 26.5 inches

Installation view of ‘Celestial City’


Penny Arcade: Cyberyuck

We saw a Cybertruck in the wild when we were coming back from a funeral. It bore a kind of gentle symmetry, because Elon Musk will be buried beneath one figuratively and possibly literally because of how the gas pedal can slide off and get stuck under a manifold, locking the pedal into its highest level of push-downedness. It's fine, though - the thirty-eight hundred or so cybertrucks out in the wild are being brought in to have the footplate pop-riveted in, like they were shoeing a horse.

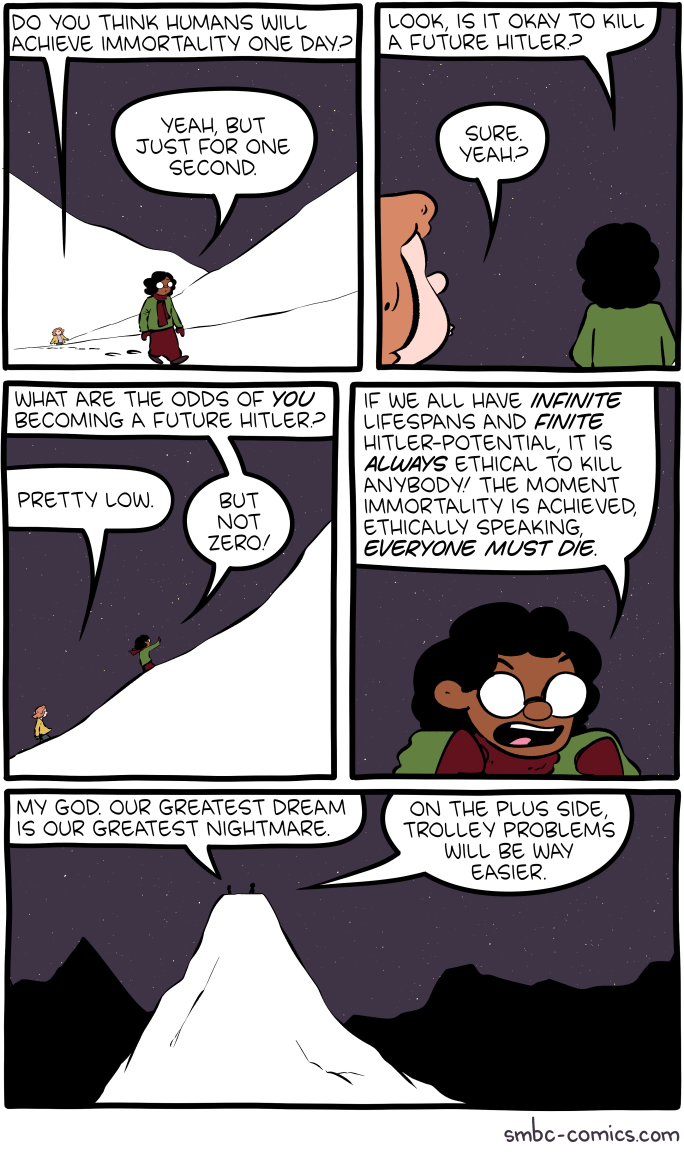

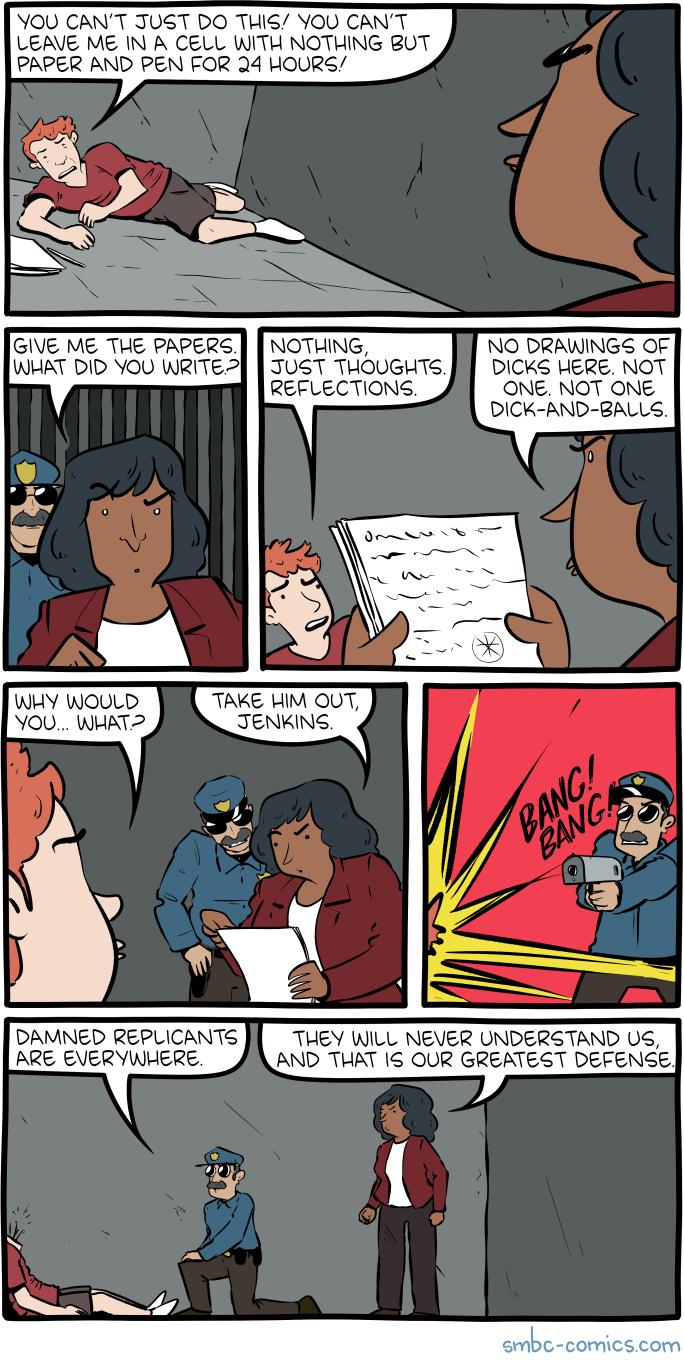

Saturday Morning Breakfast Cereal: Saturday Morning Breakfast Cereal - Immortal


Hovertext:

When you add in the Stalin potential it gets really dicey.


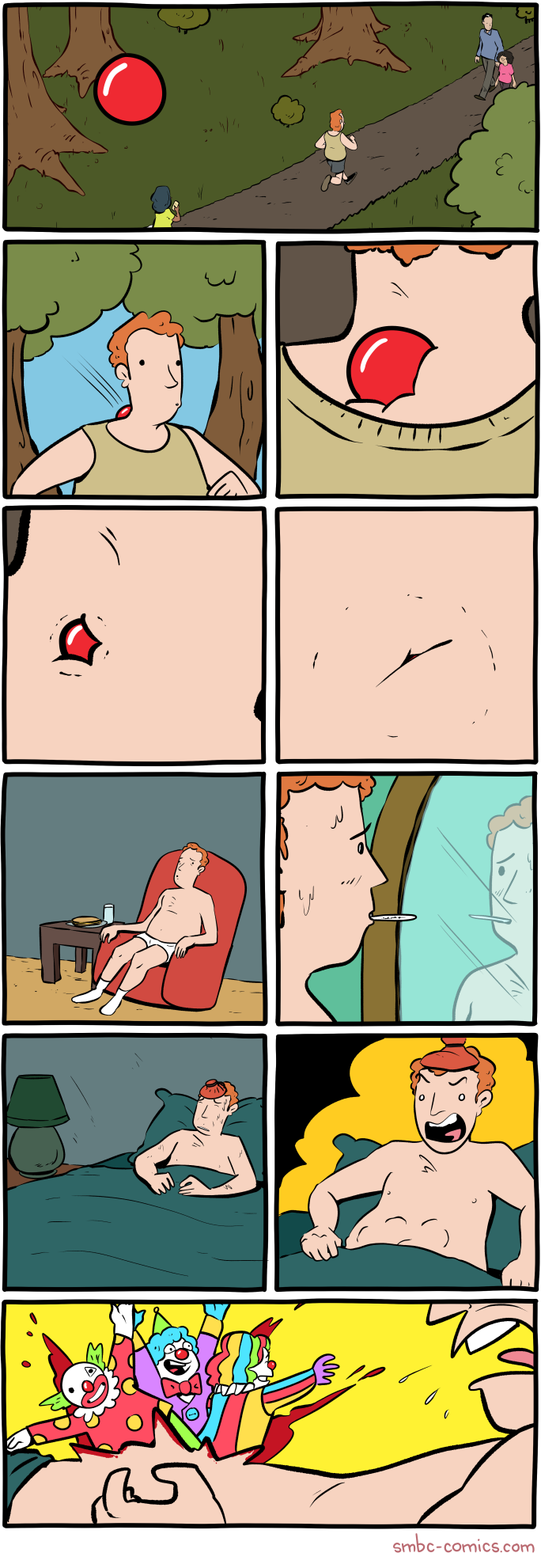

Saturday Morning Breakfast Cereal: Saturday Morning Breakfast Cereal - Good News


Hovertext:

The silver lining is due to cesium contamination.


new shelton wet/dry: ‘The old world is dying, and the new world struggles to be born: now is the time of monsters.’ –Antonio Gramsci

We do not have a veridical representation of our body in our mind. For instance, tactile distances of equal measure along the medial-lateral axis of our limbs are generally perceived as larger than those running along the proximal-distal axis. This anisotropy in tactile distances reflects distortions in body-shape representation, such that the body parts are perceived as wider than they are. While the origin of such anisotropy remains unknown, it has been suggested that visual experience could partially play a role in its manifestation.

To causally test the role of visual experience on body shape representation, we investigated tactile distance perception in sighted and early blind individuals […] Overestimation of distances in the medial-lateral over proximal-distal body axes were found in both sighted and blind people, but the magnitude of the anisotropy was significantly reduced in the forearms of blind people.

We conclude that tactile distance perception is mediated by similar mechanisms in both sighted and blind people, but that visual experience can modulate the tactile distance anisotropy.

new shelton wet/dry: from swerve of shore to bend of bay

Do you surf yourself?

No, I tried. I did it for about a week, 20 years ago. You have to dedicate yourself to these great things. And I don’t believe in being good at a lot of things—or even more than one. But I love to watch it. I think if I get a chance to be human again, I would do just that. You wake up in the morning and you paddle out. You make whatever little money you need to survive. That seems like the greatest life to me.

Or you could become very wealthy in early middle-age, stop doing the hard stuff, and go off and become a surfer.

No, no. You want to be broke. You want it to be all you’ve got. That’s when life is great. People are always trying to add more stuff to life. Reduce it to simpler, pure moments. That’s the golden way of living, I think.

related { Anecdote on Lowering the work ethic }

Greater Fool – Authored by Garth Turner – The Troubled Future of Real Estate: The 2-handle

To buy a house costing $2 million takes courage. And cash. Lots of it, including a 20% downpayment and a fat income. After putting $400,000 down and paying $73,000 in land transfer tax (in Toronto), the monthly mortgage nut is $10,300 (at 5.6%, five-year, 25-am). Plus property tax, insurance, utilities and upkeep. After shelling out $440,000 in interest over sixty months, you’d still owe $1.5 million.
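For anyone who wants to check the math, here is a minimal sketch of the standard amortization formula. It assumes the Canadian convention of semi-annual compounding on a fixed-rate mortgage; the function names are illustrative, and the principal is an input because the post does not spell out exactly what is financed.

```python
def monthly_rate(nominal_annual: float) -> float:
    """Effective monthly rate for a nominal rate compounded semi-annually.

    Assumption: Canadian fixed-rate convention (two compounding periods a year).
    """
    return (1 + nominal_annual / 2) ** (1 / 6) - 1


def monthly_payment(principal: float, nominal_annual: float, years: int) -> float:
    """Level monthly payment that retires the loan over the amortization."""
    r = monthly_rate(nominal_annual)
    n = years * 12
    return principal * r / (1 - (1 + r) ** -n)


def balance_after(principal: float, nominal_annual: float, years: int,
                  months_paid: int) -> float:
    """Outstanding balance after months_paid level payments."""
    r = monthly_rate(nominal_annual)
    payment = monthly_payment(principal, nominal_annual, years)
    growth = (1 + r) ** months_paid
    return principal * growth - payment * (growth - 1) / r


def interest_paid(principal: float, nominal_annual: float, years: int,
                  months_paid: int) -> float:
    """Total interest portion of the first months_paid payments."""
    payment = monthly_payment(principal, nominal_annual, years)
    repaid = principal - balance_after(principal, nominal_annual, years, months_paid)
    return payment * months_paid - repaid


# Two illustrative principals: price minus downpayment, and (as an assumption
# not stated in the post) the same amount plus the land transfer tax.
for principal in (1_600_000, 1_673_000):
    print(principal,
          round(monthly_payment(principal, 0.056, 25)),
          round(interest_paid(principal, 0.056, 25, 60)),
          round(balance_after(principal, 0.056, 25, 60)))
```

Under these assumptions a $1.6 million loan works out to a little under $10,000 a month, while a principal of about $1.673 million lands close to the payment, interest and remaining balance quoted above. Lenders' exact figures will vary with rounding and payment frequency.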

By the way, this would require earnings of around $400,000 to qualify for financing. The average household income in T.O. is currently $110,000. Over 90% of people don’t make the cut.

So, how is this even possible? RBC already told us properties costing just over half this amount are severely unaffordable. The worst ever. Even when mortgages were 15% or more.

But wait. Look at what the house-humping Zoocasa site is claiming. The average house (not necessarily a nice one), it finds, will be at or above the $2 million mark by 2034. Ten years. So if you start today, kids, you only need to save $40,000 a year (and get a job as a bank CFO) to join in.

As rates fall, the Z people correctly point out, real estate rises in cost. “In the case that rates do begin declining this year, we can anticipate a corresponding price increase in the market overall, meaning we can reach this multimillion-dollar average home value even faster.”

Now, for the record, the sale price of a detached in 416 has touched $2 million briefly during a post-Covid Spring market. But it’s now retreated to the $1.7 million range. Of course, in many hoods $2 million continues to be merely the entry point. Rosedale is close to $4 million on average. Even the cheek-by-jowl mini McMansions of Leaside are routinely north of $2.5 million. The local real estate board stats show forty per cent of the entire city is in the two-mill zone. So, can prices actually migrate north everywhere?

Depends. If the economy stays positive and unemployment doesn’t spike (no recession) any interest rate declines will likely bring out more buyers willing to take the plunge. Meanwhile governments have been priming the pump. The latest dumb moves came from the feds, who have greenlit 30-year mortgages on new construction and bloated the RRSP homebuyer grab to $120,000 per couple.

Concurrently, Ottawa has seriously upped the capital gains inclusion rate on every investment asset save residential real estate. So, guess where more bucks will be flowing in the future? More dumbness.

Well, what’s the current thinking on rates?

Here are the expectations using the implied Canadian Dollar Offered Rate (CDOR) movements and probabilities based on BAX prices. In other words, what does Mr. Market think Tiff is gonna do?

The chances of a first cut of 25 basis points occurring in June sit at 74%. So, best plan on that happening. Further out, the betting is 50% that another quarter point will be shaved off in September, bringing the bank rate down to 4.5% and the bank prime to 6.7%.

By March of 2025 there’s currently an 86% chance the CB will slice another quarter point off, and a full-point drop (to 4%) will not occur until the autumn of next year (94% odds). So, clearly, rate expectations have been trimmed as the world steeps in volatility and, especially, as the US economy outperforms expectations.

This means the Fed will be higher for longer, while our CB lowers first to head off negative economic growth. As boss Jerome Powell said last week, “The recent data have clearly not given us greater confidence, and instead indicate that it’s likely to take longer than expected to achieve that confidence.” Just months ago the consensus of economists was for 125 bps of easing this year. That has now turned into just 40.

So, Mr. Market expects no change in June (83%), no change in July (57%), and maybe a quarter-point drop in September (46%) and again in November (42%).

The American economy has surprised everyone, with 3% growth, full employment, rebounding profitability and over 20 new record stock market highs. Inflation is running hotter than in Canada and there’s consequently less pressure on the central bank. Complicating things is that weird presidential election – making Powell very cautious about any move that may be seen as political.

In short, it’s inevitable rates will drop. But not quite yet. Canada is also expected to see lower lending costs first. Combined with government desperation to encourage buyers, increase demand and push investment bucks from financials to real estate, the case for more house-buying remains strong.

And that sucks.

About the picture: “The “ferocious beast” picture in Thailand that you posted last week,” writes Alan, “prompted me to offer this picture taken outside a dog boutique (Feine pfote = Fine paws) in Linz, Austria while on our recent Rhine cruise (paid for out of our GT inspired b&d portfolio!) Thanks for the great daily reads.”

To be in touch or send a picture of your beast, email to ‘garth@garth.ca’.

The Universe of Discourse: Talking Dog > Stochastic Parrot

I've recently needed to explain to nontechnical people, such as my chiropractor, why the recent ⸢AI⸣ hype is mostly hype and not actual intelligence. I think I've found the magic phrase that communicates the most understanding in the fewest words: talking dog.

These systems are like a talking dog. It's amazing that anyone could train a dog to talk, and even more amazing that it can talk so well. But you mustn't believe anything it says about chiropractics, because it's just a dog and it doesn't know anything about medicine, or anatomy, or anything else.

For example, the lawyers in Mata v. Avianca got in a lot of trouble when they took ChatGPT's legal analysis, including its citations to fictitious precedents, and submitted them to the court.

“Is Varghese a real case,” he typed, according to a copy of the exchange that he submitted to the judge.

“Yes,” the chatbot replied, offering a citation and adding that it “is a real case.”

Mr. Schwartz dug deeper.

“What is your source,” he wrote, according to the filing.

“I apologize for the confusion earlier,” ChatGPT responded, offering a legal citation.

“Are the other cases you provided fake,” Mr. Schwartz asked.

ChatGPT responded, “No, the other cases I provided are real and can be found in reputable legal databases.”

It might have saved this guy some suffering if someone had explained to him that he was talking to a dog.

The phrase “stochastic parrot” has been offered in the past. This is completely useless, not least because of the ostentatious word “stochastic”. I'm not averse to using obscure words, but as far as I can tell there's never any reason to prefer “stochastic” to “random”.

I do kinda wonder: is there a topic on which GPT can be trusted, a non-canine analog of butthole sniffing?

Addendum

I did not make up the talking dog idea myself; I got it from someone else. I don't remember who.

Planet Haskell: Mark Jason Dominus: Talking Dog > Stochastic Parrot

Penny Arcade: Everything We Know about May/June Sticker Packs

I saw a Cybertruck in real life for the first time a few days ago. That is the ugliest vehicle I’ve ever seen and I can remember when people were buying the PT Cruiser. I can’t imagine a normal, human person seeing that monstrosity and thinking “That’s the truck for me!” What I’m saying is, Cybertruck owners don’t deserve rights.

Schneier on Security: Using Legitimate GitHub URLs for Malware

Interesting social-engineering attack vector:

McAfee released a report on a new LUA malware loader distributed through what appeared to be a legitimate Microsoft GitHub repository for the “C++ Library Manager for Windows, Linux, and MacOS,” known as vcpkg.

The attacker is exploiting a property of GitHub: comments to a particular repo can contain files, and those files will be associated with the project in the URL.

What this means is that someone can upload malware and “attach” it to a legitimate and trusted project.

As the file’s URL contains the name of the repository the comment was created in, and as almost every software company uses GitHub, this flaw can allow threat actors to develop extraordinarily crafty and trustworthy lures.

For example, a threat actor could upload a malware executable in NVIDIA’s driver installer repo that pretends to be a new driver fixing issues in a popular game. Or a threat actor could upload a file in a comment to the Google Chromium source code and pretend it’s a new test version of the web browser.

These URLs would also appear to belong to the company’s repositories, making them far more trustworthy.
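As a rough illustration of why such links are persuasive, the sketch below checks whether a GitHub URL has the shape of a comment attachment rather than, say, a release download. The `/<owner>/<repo>/files/<id>/<name>` path shape is an assumption drawn from public reporting on this technique, not something stated in the excerpt above, and `looks_like_comment_attachment` is a hypothetical helper name.

```python
from urllib.parse import urlparse

# Hosts treated as GitHub for this sketch (assumption: no mirrors or other domains).
GITHUB_HOSTS = {"github.com", "www.github.com"}


def looks_like_comment_attachment(url: str) -> bool:
    """Return True when a GitHub URL has the assumed comment-attachment shape.

    Assumed shape: /<owner>/<repo>/files/<numeric id>/<file name>.
    The owner and repo appear in the URL even though the file was uploaded
    by whoever wrote the comment, not by the repository's maintainers.
    """
    parsed = urlparse(url)
    if parsed.hostname not in GITHUB_HOSTS:
        return False
    parts = [p for p in parsed.path.split("/") if p]
    return (
        len(parts) == 5
        and parts[2] == "files"
        and parts[3].isdigit()
    )
```

A check like this only flags the path shape. It says nothing about whether the file is malicious, and the repository name in the URL should not be read as an endorsement either way.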

ScreenAnarchy: Sound And Vision: Luca Guadagnino

In the article series Sound and Vision we take a look at music videos from notable directors. This week we take a look at several music videos by Luca Guadagnino. Luca Guadagnino's films are vibrant and lush, pulsating with life and a heartbeat, like music. He is often one to curate his soundtracks carefully, with the likes of The Rolling Stones, Harry Nilsson, Captain Beefheart, Sufjan Stevens, Trent Reznor and Atticus Ross, Thom Yorke and John Adams showing up on his soundtracks. Sometimes he chooses pre-existing tracks, but more often he asks his favorite artists to provide the sonic backdrop for his films. It is fitting that a few of the music videos Guadagnino directed are for tracks tailor made for his own features, starting...

Colossal: Ewa Juszkiewicz’s Reimagined Historical Portraits of Women Scrutinize the Nature of Concealment

“Untitled (after Élisabeth Vigée Le Brun)” (2020), oil on canvas, 130 x 100 centimeters. All images © the artist, courtesy of Almine Rech, shared with permission

From elaborate hairstyles to hypertrophied mushrooms, an array of unexpected face coverings feature in Ewa Juszkiewicz’s portraits. Drawing on genteel likenesses of women primarily from the 18th and 19th centuries, the artist superimposes fabric, bouquets of fruit, foliage, and more, over the women’s faces.

In a collateral event during the 60th Annual Venice Biennale, presented by the Fundación Almine y Bernard Ruiz-Picasso and Almine Rech, Juszkiewicz presents a suite of works made between 2019 and 2024 that encapsulate her precise reconception of a popular Western genre. Locks with Leaves and Swelling Buds showcases her elaborate, technically accomplished pieces using traditional oil painting and varnishing techniques.

Juszkiewicz’s anonymous subjects are reminders of the systemic omission of women from the histories of art and the past more broadly. Literally in the face of portraits meant to memorialize and celebrate individuals, the artist erases their identities entirely, alluding only to the original artists’ names in the titles. In a seemingly contradictory approach, by drawing our attention to this erasure, Juszkiewicz stokes our curiosity about who they were.

“By covering the face of historical portraits, Juszkiewicz challenges the very essence of this genre: she destroys the portrait as such,” says curator Guillermo Solana. In a recent video, we get a peek inside the artist’s studio, where she describes how elements of another European painting tradition, the still life, proffer a rich well of symbolic objects to conceal each sitter’s face, from botanicals to ribbons to food.

Locks with Leaves and Swelling Buds continues in Venice at Palazzo Cavanis through September 1. Find more on the artist’s website and Instagram.

“Untitled (after François Gérard)” (2023), oil on canvas, 100 x 80 centimeters

“Untitled (after Joseph van Lerius)” (2020), oil on canvas, 70 x 55 centimeters

Left: “Portrait in Venetian Red (after Élisabeth-Louise Vigée Le Brun)” (2024), oil on canvas, 190 x 140 centimeters. Right: “Lady with a Pearl (after François Gérard)” (2024), oil on canvas, 80 x 65 centimeters

“Bird of paradise” (2023) oil on canvas, 200 x 160 centimeters. Photo by Serge Hasenböhler Fotografie

Do stories and artists like this matter to you? Become a Colossal Member today and support independent arts publishing for as little as $5 per month. The article Ewa Juszkiewicz’s Reimagined Historical Portraits of Women Scrutinize the Nature of Concealment appeared first on Colossal.

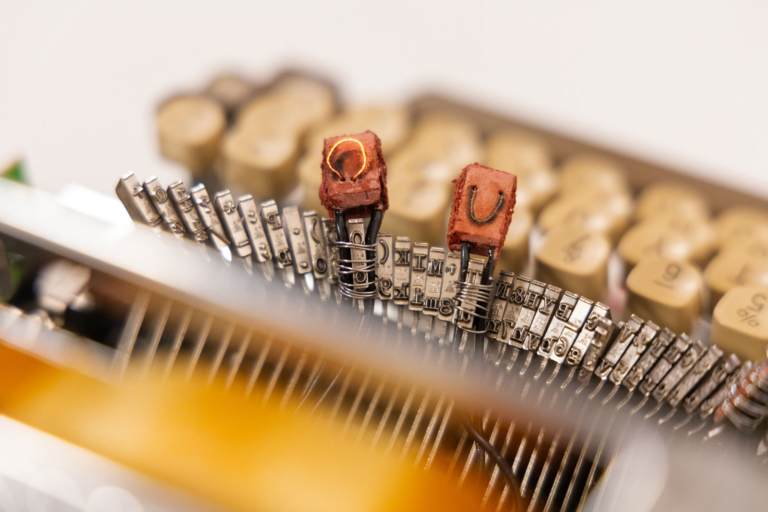

CreativeApplications.Net: Toaster-Typewriter – An investigation of humor in design

Toaster-Typewriter is the first iteration of what technology made with humor can do. A custom made machine that lets one burn letters onto bread, this hybrid appliance nudges users to exercise their imaginations while performing a mundane task like making toast in the morning.

Category: Objects

Tags: absurd / critique / device / eating / education / emotion / engineering / experience / experimental / machine / Objects / parsons / playful / politics / process / product design / reverse engineering / storytelling / student / technology / typewriter

People: Ritika Kedia

Michael Geist: The Law Bytes Podcast, Episode 200: Colin Bennett on the EU’s Surprising Adequacy Finding on Canadian Privacy Law

A little over five years ago, I launched the Law Bytes podcast with an episode featuring Elizabeth Denham, then the UK’s Information and Privacy Commissioner, who provided her perspective on Canadian privacy law. I must admit that I didn’t know what the future would hold for the podcast, but I certainly did not envision reaching 200 episodes. I think it’s been a fun, entertaining, and educational ride. I’m grateful to the incredible array of guests, to Gerardo Lebron Laboy, who has been there to help produce every episode, and to the listeners who regularly provide great feedback.

The podcast this week goes back to where it started with a look at Canadian privacy through the eyes of Europe. It flew under the radar for many, but earlier this year the EU concluded that Canada’s privacy law still provides an adequate level of protection for personal information. The decision comes as a bit of a surprise to many given that Bill C-27 is currently at clause-by-clause review and there have been years of criticism that the law is outdated. To help understand the importance of the EU adequacy finding and its application to Canada, Colin Bennett, one of the world’s leading authorities on privacy and privacy governance, joins the podcast.

The podcast can be downloaded here, accessed on YouTube, and is embedded below. Subscribe to the podcast via Apple Podcast, Google Play, Spotify or the RSS feed. Updates on the podcast on Twitter at @Lawbytespod.

Show Notes:

Bennett, The “Adequacy” Test: Canada’s Privacy Protection Regime Passes, but the Exam Is Still On

EU Adequacy Finding, January 2024

Credits:

EU Reporter, EU Grants UK Data Adequacy for a Four Year Period

The post The Law Bytes Podcast, Episode 200: Colin Bennett on the EU’s Surprising Adequacy Finding on Canadian Privacy Law appeared first on Michael Geist.

Ideas: Salmon depletion in Yukon River puts First Nations community at risk

Once, there were half a million salmon in the Yukon River, but now they're almost gone. For the Little Salmon Carmacks River Nation, these salmon are an essential part of their culture — and now their livelihood is in peril. IDEAS shares their story as they struggle to keep their identity after the loss of the salmon migration.

Colossal: Diverse Expressions: 5 Artwork Themes to Discover at The Other Art Fair Brooklyn This May

Dawn Beckles, “Forever The Cream” (2023), acrylic, charcoal, gold leaf, spray paint, paper on canvas

Get ready for an art event unlike any other. The Other Art Fair presented by Saatchi Art returns to ZeroSpace in Brooklyn from May 16 to 19. With each new fair comes new experiences, and this edition is no different as it unveils a vibrant roster of fresh artwork, new talents, and unexpected delights.

This May installment promises an array of exciting features, including 120+ independent artists set to showcase their collections, the 10th edition of Mike Perry and Josh Cochran’s “Get Nude, Get Drawn” portrait experience, complimentary hand-crafted whisky cocktails (exclusively for those aged 21+) on Thursday’s Opening Night, and live performances on both Opening Night and the highly anticipated Friday Late soirée.

Ahead of the main event, we’re highlighting five categories of artists based on shared themes, offering a look at the diverse range of artistic practices at this year’s fair.

Still life

Bella Wattles fuses colorful objects, ceramics, and thrifted treasures into whimsical paintings that echo societal harmony and celebrate women and LGBTQ+ creators.

Still lifes by self-taught artist Dawn Beckles bridge the energies of London and the Caribbean. Her bold strokes celebrate the beauty of everyday moments, inviting viewers to reconnect with their own stories.

Bryane Broadie, “Mind Growth”

Celebrating Black Portraiture

Bryane Broadie, a graphic artist from Prince George’s County, Maryland, discovered his passion for art in elementary school. His digital and mixed media works reflect Black history and culture.

Inspired by childhood resourcefulness and Brooklyn’s cultural tapestry, Sean Qualls crafts evocative illustrations and paintings. His work investigates identity, history, the human spirit, and universal human experiences.

Carrie Lipscomb, “Blocks (small)” (2024), thread on fine art paper

Embossed and Embroidered

Guided by pure geometries, Brooklyn-based mixed-media artist Carrie Lipscomb explores space and texture, urging viewers to engage with the subtleties in found materials.

Sophie Reid is a visual storyteller who blends geometric shapes across multimedia. She honed her craft as a graphic designer and in her art, she uses illustrations and stitch works to echo her love for design and travel.

Isabella Bejarano, “Silver Water” (2015), fine art photography print in a custom aqua plexi shadowbox frame, limited edition of 25

New Mediums

Mounts in colored acrylic boxes: NYC-based Venezuelan-born photographer Isabella Bejarano champions environmental causes through her sustainable fine art prints, donating proceeds to combat climate change.

Film lightboxes: Montreal-based artist and film aficionado Hugo Cantin creates patterned collages on vintage film stock. Housed in light boxes, his illuminated creations fuse sophisticated design with historical narratives.

Patrick Skals, “THE WRITING IS ON THE WALL” (2023), acrylic, ink, and watercolor on canvas

Text

Toronto artist Patrick Skals escaped the corporate world in 2019 to pursue his unique vision. His collection, In Other Words, aims to disrupt norms with abstract commentary, challenging viewers to be introspective.

Kelli Kikcio, an artist from Toronto now based in Brooklyn, takes a hands-on approach in her work, which reflects her commitment to social justice and community engagement.

See work by all these artists and more at The Other Art Fair’s Brooklyn edition, on view from May 16 to 19.

Grab your tickets for an unforgettable weekend at theotherartfair.com.

Open Culture: Humans First Started Enjoying Cannabis in China Circa 2800 BC

Judging by how certain American cities smell these days, you’d think cannabis was invented last week. But that spike in enthusiasm, as well as in public indulgence, comes as only a recent chapter in that substance’s very long history. In fact, says the presenter of the PBS Eons video above, humanity began cultivating it “in what’s now China around 12,000 years ago. This makes cannabis one of the single oldest known plants we domesticate,” even earlier than “staples like wheat, corn, and potatoes.” By that time scale, it wasn’t so long ago — four millennia or so — that the lineages used for hemp and for drugs genetically separated from each other.

The oldest evidence of cannabis smoking as we know it, also explored in the Science magazine video below, dates back 2,500 years. “The first known smokers were possibly Zoroastrian mourners along the ancient Silk Road who burned pot during funeral rituals,” a proposition supported by the analysis of the remains of ancient braziers found at the Jirzankal cemetery, at the foot of the Pamir mountains in western China. “Tests revealed chemical compounds from cannabis, including the non-psychoactive cannabidiol, also known as CBD” — itself reinvented in our time as a thoroughly modern product — and traces of a THC byproduct called cannabinol “more intense than in other ancient samples.”

What made the Jirzankal cemetery’s stash pack such a punch? “The region’s high altitude could have stressed the cannabis, creating plants naturally high in THC,” writes Science’s Andrew Lawler. “But humans may also have intervened to breed a more wicked weed.” As cannabis-users of the sixties and seventies who return to the fold today find out, the weed has grown wicked indeed over the past few decades. But even millennia ago and half a world away, civilizations that had incorporated it for ritualistic use — or as a medical treatment — may already have been agriculturally guiding it toward greater potency. Your neighborhood dispensary may not be the most sublime place on Earth, but at least, when next you pay it a visit, you’ll have a sound historical reason to cast your mind to the Central Asian steppe.

Related content:

The Drugs Used by the Ancient Greeks and Romans

Algerian Cave Paintings Suggest Humans Did Magic Mushrooms 9,000 Years Ago

Pipes with Cannabis Traces Found in Shakespeare’s Garden, Suggesting the Bard Enjoyed a “Noted Weed”

Reefer Madness, 1936’s Most Unintentionally Hilarious “Anti-Drug” Exploitation Film, Free Online

Carl Sagan on the Virtues of Marijuana (1969)

Watch High Maintenance: A Critically-Acclaimed Web Series About Life & Cannabis

The New Normal: Spike Jonze Creates a Very Short Film About America’s Complex History with Cannabis

Based in Seoul, Colin Marshall writes and broadcasts on cities, language, and culture. His projects include the Substack newsletter Books on Cities, the book The Stateless City: a Walk through 21st-Century Los Angeles and the video series The City in Cinema. Follow him on Twitter at @colinmarshall or on Facebook.

Penny Arcade: Cyberyuck

TOPLAP: It’s a big week for live coding in France!

Last week it was /* VIU */ in Barcelona, this week it’s France!

Events

- Live Coding Study Day April 23, 2024

Organizers: Raphaël Forment, Agathe Herrou, Rémi Georges

- 2 Academic sessions

- Speakers: Julian Rohrhuber, Yann Orlarey, and Stéphane Letz

- Evening concert, featuring performances selected by Artistic Director Rémi Georges balancing originality with a mix of local French and international artists. Lineup: Jia Liu (GER), Bruno Gola (Brazil/Berlin), Adel Faure (FR), ALFALFL (FR), Flopine (FR).

“While international networks centered on live-coding have been established for nearly 20 years through the TOPLAP collective, no academic event has ever taken place in France on this theme. The goal of this study day is to put in motion a national research network connected to its broader european and international counterparts.”

- Algorave in Lyon April 27 – 28 (12 hrs!) Live Streamed to YouTube.com/eulerroom

The 12 hour marathon Algorave is emerging as a unique French specialty (or maybe they just enjoy self-inflicted exhaustion…) Last year was a huge success so the team is back at it for more! 24 sound and/or visual artists including: th4, zOrg, Raphaël Bastide, Crash Server, Eloi el bon Noi, Adel Faure, Fronssons, QBRNTHSS, Bubobubobubo, azertype, eddy flux, + many more.

Rave on!

Disquiet: Pre-Show (Bill Frisell & Co.)

As I type this, I’m preparing to drive over to Berkeley, from San Francisco, to see guitarist Bill Frisell in a sextet that will be premiering new music. The group, who will play at Freight & Salvage, consists of Frisell plus violinist Jenny Scheinman, violist Eyvind Kang, cellist Hank Roberts, bassist Thomas Morgan, and drummer Rudy Royston.

There is, as far as I can tell, no available footage or audio of them playing as a group, so I’ve been piecing together a mental sonic image, as it were, from various smaller group settings.

These two short videos are all the strings from the sextet excepting the bass, filmed back on November 4, 2017. It’s the same group (Frisell, Scheinman, Kang, Roberts) who recorded the 2011 album Sign of Life (Savoy) and the 2005 album Richter 858 (Songlines). The latter was recorded back in 2002, so this is no new partnership by any means.

Roberts has, I believe, with Frisell, the longest-running association of all the musicians playing in the premiere. There’s plenty of examples, both commercial releases and live video, including this short piece, recorded June 15, 2014, at the New Directions Cello Festival, at Ithaca College, in Ithaca, New York.

Roberts was one of the first musicians I interviewed professionally, shortly after I got out of college in 1988. By then I had interviewed numerous musicians for a school publication, including the drummer Bill Bruford (Yes, King Crimson) and Joseph Shabalala (founder of the vocal group Ladysmith Black Mambazo). After school I moved to New York City (first Manhattan and then Brooklyn), and for a solid swath of that time I was lucky to score a shared apartment on Crosby Street just south of Houston, incredibly close to the Knitting Factory, where I went several times a week and saw Frisell, Roberts, and so many “Downtown” musicians of that era in each other’s groups. I also saw Frisell play at the Village Vanguard around that time, but mostly just went to whatever was at the Knitting Factory on a given night. When I interviewed Roberts, it was on the subject of his then fairly new record, Black Pastels. (I wrote the piece for Pulse! magazine, published by Tower Records. In 1989 I moved to California to be an editor at Pulse!)

Frisell, bassist Morgan, and drummer Royston have recorded and toured widely and frequently in recent years. Here they are on July 3, 2023, at Arts Center at Duck Creek.

I’m imagining tonight’s music will have the “chamber Americana” quality of the quartet heard above, but the presence of Royston may rev things up a little, and it may have more of a jazz quality, closer to the trio work highlighted here.

The Shape of Code: Relative sizes of computer companies

How large are computer companies, compared to each other and to companies in other business areas?

Stock market valuation is one measure of company size; another is a company’s total revenue (i.e., total amount of money brought in by a company’s operations). A company can have a huge revenue, but a low stock market valuation because it makes little profit (because it has to spend an almost equally huge amount to produce that income) and things are not expected to change.

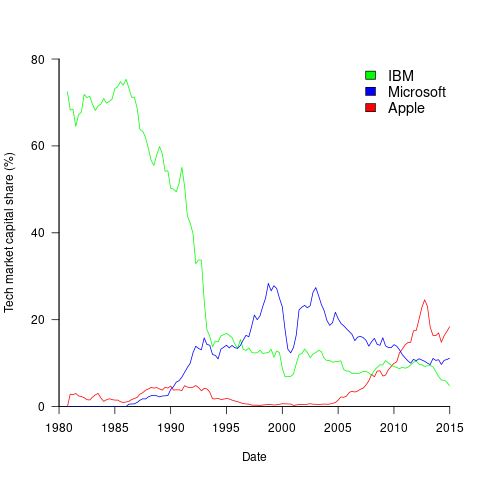

The plot below shows the stock market valuation of IBM/Microsoft/Apple, over time, as a percentage of the valuation of tech companies on the US stock exchange (code+data on Github):

The growth of major tech companies from the mid-1980s caused IBM’s dominant position to decline dramatically, while first Microsoft, and then Apple, grew to have more dominant market positions.

Is IBM’s decline in market valuation mirrored by a decline in its revenue?

The Fortune 500 was an annual list of the top 500 largest US companies, by total revenue (it’s now a global company list), and the lists from 1955 to 2012 are available via the Wayback Machine. Which of the 1,959 companies appearing in the top 500 lists should be classified as computer companies? Lacking a list of business classification codes for US companies, I asked Chat GPT-4 to classify these companies (responses, which include a summary of the business area). GPT-4 sometimes classified companies that were/are heavy users of computers, or suppliers of electronic components as a computer company. For instance, I consider Verizon Communications to be a communication company.

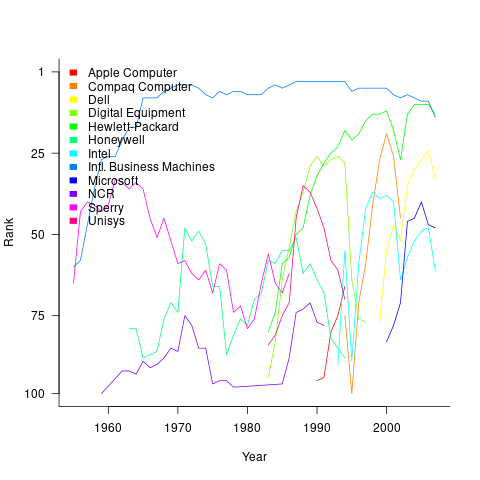

The plot below shows the ranking of those computer companies appearing within the top 100 of the Fortune 500, after removing companies not primarily in the computer business (code+data):

IBM is the uppermost blue line, ranking in the top-10 since the late-1960s. Microsoft and Apple are slowly working their way up from much lower ranks.

These contrasting plots illustrate the fact that while IBM continued to be a large company by revenue, its low profitability (and major losses) and the perceived lack of a viable route to sustainable profitability resulted in it having a lower stock market valuation than computer companies with much lower revenues.
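The two measures compared in this post can be sketched in a few lines. The company names and figures below are made up purely to show how a revenue ranking and a valuation share can disagree; they are not taken from the Fortune 500 or stock market data, and `percent_share` and `rank_by` are hypothetical helper names.

```python
def percent_share(values):
    """Map each company to its percentage of the group's total."""
    total = sum(values.values())
    return {name: 100.0 * v / total for name, v in values.items()}


def rank_by(values):
    """Return company names ordered from largest to smallest value."""
    return sorted(values, key=values.get, reverse=True)


# Hypothetical figures in arbitrary units, chosen only to show the contrast.
revenue = {"BigOld": 90.0, "NewA": 30.0, "NewB": 20.0}
valuation = {"BigOld": 50.0, "NewA": 250.0, "NewB": 200.0}

print(rank_by(revenue))          # ordering by total revenue
print(rank_by(valuation))        # ordering by stock market valuation
print(percent_share(valuation))  # each company's share of the group's valuation
```

In this toy data the company with the largest revenue has the smallest share of valuation, which is the pattern the two plots are described as showing for IBM.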

new shelton wet/dry: shadow trading

No evidence for differences in romantic love between young adult students and non-students — The findings suggest that studies investigating romantic love using student samples should not be considered ungeneralizable simply because of the fact that students constitute the sample.

Do insects have an inner life? Crows, chimps and elephants: these and many other birds and mammals behave in ways that suggest they might be conscious. And the list does not end with vertebrates. Researchers are expanding their investigations of consciousness to a wider range of animals, including octopuses and even bees and flies. […] Investigations of fruit flies (Drosophila melanogaster) show that they engage in both deep sleep and ‘active sleep’, in which their brain activity is the same as when they’re awake. “This is perhaps similar to what we call rapid eye movement sleep in humans, which is when we have our most vivid dreams, which we interpret as conscious experiences”

“This research shows the complexity of how caloric restriction affects telomere loss.” After one year of caloric restriction, the participants actually lost their telomeres more rapidly than those on a standard diet. However, after two years, once the participants’ weight had stabilized, they began to lose their telomeres more slowly.

“It would mean that two-thirds of the universe has just disappeared”

AI study shows Raphael painting was not entirely the Master’s work

I bought 300 emoji domain names from Kazakhstan and built an email service [2021]

Shadow trading is a new type of insider trading that affects people who deal with material nonpublic information (MNPI). Insider trading involves investment decisions based on some kind of MNPI about your own company; shadow trading entails making trading decisions about other companies based on your knowledge of external MNPI. The issue has yet to be fully resolved in court, but the SEC is prosecuting this behavior. More: we provide evidence that shadow trading is an undocumented and widespread mechanism that insiders use to avoid regulatory scrutiny

The sessile lifestyle of acorn barnacles makes sexual reproduction difficult, as they cannot leave their shells to mate. To facilitate genetic transfer between isolated individuals, barnacles have extraordinarily long penises. Barnacles probably have the largest penis-to-body size ratio of the animal kingdom, up to eight times their body length

Daniel Lemire's blog: How do you recognize an expert?

Go back to the roots: experience. An expert is someone who has repeatedly solved the concrete problem you are encountering. If your toilet leaks, an experienced plumber is an expert. An expert has a track record and has had to face the consequences of their work. Failing is part of what makes an expert: any expert should have stories about how things went wrong.

I associate the word expert with ‘the problem’ because we know that expertise does not transfer well: a plumber does not necessarily make a good electrician. And within plumbing, there are problems that only some plumbers should solve. Furthermore, you cannot abstract a problem: you can study fluid mechanics all you want, but it won’t turn you into an expert plumber.

That’s one reason why employers ask for relevant experience: they seek expertise they can rely on. It is sometimes difficult to acquire expertise in an academic or bureaucratic setting because the problems are distant or abstract. Your experience may not translate well into practice. Sadly we live in a society where we often lose track of and undervalue genuine expertise… thus you may take software programming classes from people who never built software or civil engineering classes from people who never worked on infrastructure projects.

So… how do you become an expert? Work on real problems. Do not fall for reverse causation: if all experts dress in white, dressing in white won’t turn you into an expert. Listening to the expert is not going to turn you into an expert. Lectures and videos can be inspiring but they don’t build your expertise. Getting a job with a company that has real problems, or running your own business… that’s how you acquire experience and expertise.

Why would you want to when you can make a good living otherwise, without the hard work of solving real problems? Actual expertise is capital that can survive a market crash or a political crisis. After Germany’s defeat in 1945… many of the aerospace experts went to work for the American government. Relevant expertise is robust capital.

Why won’t everyone seek genuine expertise? Because there is a strong countervailing force: showing a total lack of practical skill is a status signal. Wearing a tie shows that you don’t need to work with your hands.

But again: don’t fall for reverse causality… broadcasting that you don’t have useful skills might be fun if you are already of high status… but if not, it may not grant you a higher status.

And status games without a solid foundation might lead to anxiety. If you can get stuff done, if you can fix problems, you don’t need to worry so much about what people say about you. You may not like the color of the shoes of your plumber, but you won’t snub him over it.

So get expertise and maintain it. You are likely to become more confident and happier.

Colossal: Street Photographer Tony Van Le Captures Beauty in Brevity

“The Magic Number.” All images © Tony Van Le, shared with permission

As John Koenig writes in The Dictionary of Obscure Sorrows, the term sonder refers to “the realization that each random passerby is living a life as vivid and complex as your own—an epic story that continues invisibly around you.” This profound feeling reveals itself in Tony Van Le’s street photography, circling around the meaningful coincidences that he captures instantaneously.

Le is fueled by serendipity and strangeness. Having originally explored these grounds through music production, the artist has since moved toward manifesting a similar ethos through photography. Impassioned by the transient work of Vivian Maier, Le has spent years cultivating this calling, becoming more confident with focusing his lens on strangers over time. Purely candid and wondrously ephemeral, the artist approaches each street scene with a clear mind.

He explains, “When I’m out on the street, my mindset combines looking for the out-of-the-ordinary with trying to be a blank slate. By being fully attuned to the moment, I’m more open to the photographic potential of what I encounter.” Although fleeting, each snapshot is a reminder of the wonder that comes from being fully present and deeply sensitive toward life’s mundane charm.

Le has a number of ongoing projects, which you can find on his website. He will also be exhibiting at Gallery-O-Rama in July, so follow along on Instagram for updates.

“Into the Unknown”

“Man and Iguana”

“Metacognition”

“Woman with Mannequin on Motorbike”

“Diverse Pathways”

“Bicyclist and Steam”

“Among Us”

“Police Officer, Red-Tailed Hawk, and Rodent”

“Nature’s Crown”

Saturday Morning Breakfast Cereal: Saturday Morning Breakfast Cereal - The Test

Hovertext:

Look it's the only test with no false negatives.

Trivium: 21apr2024

Building a GPS Receiver using RTL-SDR, by Phillip Tennen.

Reproducing EGA typesetting with LaTeX, using the Baskervaldx font.

The Solution of the Zodiac Killer’s 340-Character Cipher, final, comprehensive report on the project by David Oranchak, Sam Blake, and Jarl Van Eycke.

Bridging brains: exploring neurosexism and gendered stereotypes in a mindsport, by Samantha Punch, Miriam Snellgrove, Elizabeth Graham, Charlotte McPherson, and Jessica Cleary.

Yotta is a minimalistic forth-like language bootstrapped from x86_64 machine code.

SSSL - Hackless SSL bypass for the Wii U, released one day after shutdown of the official Nintendo servers.

stagex, a container-native, full-source bootstrapped, and reproducible toolchain.

Computing Adler32 Checksums at 41 GB/s, by wooosh.

Random musings on the Agile Manifesto

doom-htop, “Ever wondered whether htop could be used to render the graphics of cult video games?”

A proper cup of tea, try this game!

Greater Fool – Authored by Garth Turner – The Troubled Future of Real Estate: The Sharia scare

“I’m Muslim,” Sam said. “So I can’t.”

We were building him a retirement portfolio a few years ago. Sam told me all about riba. Also why he couldn’t own any bonds, have a regular savings account or be talked into a GIC by TNL@TB.

He explained that Muslims who adhere to Islamic law have a different relationship with money. It’s not an asset all on its own, he told me. Instead money’s just a way of measuring things – like the value of work or the cost of a bungalow in Brantford. When you look at currency like that, it’s unethical (and wrong) to receive interest income from money alone. It’s called riba, and Islamic law forbids it.

So this is why Sam couldn’t own a bond paying interest semi-annually, or a GIC that dumped an earned amount into his lap each year. Or even a bank savings account earning a piddling little amount. He had one of those, he told me, but because riba cannot be used for personal benefit, he donated those dollars to the mosque.

We solved the portfolio thing easily. Bond ETFs don’t pay interest, but dividends which represent the earned amount and any capital gain. For tax purposes these are treated either as income or gains, and therefore comply with religious tenets.

But here was Sam’s real problem: getting a mortgage.

Home loans come with a big interest component – in fact, in the early years of amortization it’s the largest component of monthly payments. For a few years Muslim-friendly mortgages have been available from a few lenders, but they’ve been scarce and expensive, owing partly to the fact Muslims don’t believe in foreclosures, either. So the cost of a ‘halal’ loan (that means ’permitted’) has been about 4% more than non-believers pay for bank mortgages.

That brings us to Tuesday last. Ottawa’s federal budget is “exploring new measures to expand access to halal mortgages” and other non-traditional borrowings.

Well, guess what happened next?

“Trudeau bringing Sharia law to Canada!” screamed the first of a slew of comments posted to this blog (all deleted). Soon social media was more of a dank, poisonous swamp than usual. The extreme elements of historical Sharia law (lashings, stonings, amputation for relatively minor crimes plus the subjugation of women) were bandied about as if soon to come to your hood. And people wondered why, if Muslims can get mortgages without interest being charged, the rest of us can’t?

As usual, there were two things in the background. Ignorance. And political manipulation.

I’m no expert on religious laws, the Quran or Islam, but the idea behind halal mortgages seems pretty simple. The buyer still pays the lender, just in a different way and just as much (or more). In one form, the lender buys the property with the purchaser in a rent-to-own situation. Or buyer and lender enter into a partnership with ownership being transferred as lump sum payments are made. In any case, there’s a profit component built in for the finance company that equals what a traditional amortized mortgage would yield. Presumably the feds are looking to allow more lenders to get into the game by figuring out appropriate forms of CMHC insurance, now that Muslim families form a growing (5% or 1.7 million) hunk of the population.

So, a halal mortgage ain’t cheaper. Nobody gets a free pony.

But what we all get is a new way for the haters to express prejudice. Just as the MAGA adherents to the south have vilified immigrants (especially the brown ones) and as ‘Christian nationalism’ is embraced by Donald Trump, society grows darker. Sadly, the rightist and populist movements there, here and in Europe attract followers by seeking to turn the clock back, recalling a former, whiter, more comfortable and familiar world. And so the man who may become the most powerful person in the world can call newcomers to his country ‘vermin’ and ‘animals’, suggesting they are ‘poisoning’ America and its school system.

Never in a lifetime did we think this could happen. But here we are.

For the record, Muslim-friendly home loans are a nothingburger. They don’t give borrowers an advantage. They level the playing field. They do not mean Sharia law is being imposed, enacted or considered. You are not being threatened.

Also for the record, how can thinking about money this way be a bad thing? Value what it does, not what it is.

About the picture: “Your favourite millennial here,” writes Liam. “My wife and I just adopted Lenny here from a breeder on Vancouver Island. He’s adjusting to life in Montreal well, but, like his main man, struggles with the language, the sporadic street cleaning and joykill landlords.”

To be in touch or send a picture of your beast, email to ‘garth@garth.ca’.

Planet Haskell: Oleg Grenrus: A note about coercions

Safe coercions in GHC are a very powerful feature. However, they are not perfect, and many years ago I was already thinking about how we could make them more expressive.

In particular, ideas like "higher-order roles" have been buzzing around. For the record, I don't think Proposal #233 is great; but because that proposal is almost four years old, I don't remember why, nor do I have a tangible counter-proposal.

So I try to recover my thoughts.

I like to build small prototypes; and I wanted to build a small language with zero-cost coercions.

The first approach, which I present here, doesn't work.

While it allows us to model coercions, and very powerful ones, these coercions are not zero-cost, as we will see. For a language like GHC Haskell, where being zero-cost is a non-negotiable requirement, this simple approach doesn't work.

The small "formalisation" is in an Agda file: https://gist.github.com/phadej/5cf29d6120cd27eb3330bc1eb8a5cfcc

Syntax

We start by defining syntax. Our language is "simple": there are types

    A, B = A -> B        -- function type, "arrow"

coercions

    co = refl A          -- reflexive coercion
       | sym co          -- symmetric coercions
       | arr co₁ co₂     -- coercion of arrows built from codomain and domain
                         -- type coercions

and terms

    f, t, s = x          -- variable
            | f t        -- application
            | λ x . t    -- lambda abstraction
            | t ▹ co     -- cast

Obviously we'd add more stuff (in particular, I'm interested in expanding coercion syntax), but these are enough to illustrate the problem.
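As a rough illustration, the grammar above could be transcribed into Haskell data types along the following lines. This is only a sketch, not the Agda formalisation linked earlier: the constructor names are made up for this example, and the `Base` constructor is an assumption added so that the type grammar has a leaf (the post lists only the arrow type).

```haskell
-- Sketch of the syntax as Haskell data types (names are illustrative).

-- Types. `Base` is a hypothetical base type, not part of the post's grammar.
data Ty
  = Base String      -- assumed leaf type
  | Fun Ty Ty        -- A -> B, function type ("arrow")
  deriving (Eq, Show)

-- Coercions.
data Co
  = Refl Ty          -- refl A
  | Sym Co           -- sym co
  | Arr Co Co        -- arr co₁ co₂
  deriving (Eq, Show)

-- Terms.
data Tm
  = Var String       -- x
  | App Tm Tm        -- f t
  | Lam String Tm    -- λ x . t
  | Cast Tm Co       -- t ▹ co
  deriving (Eq, Show)

-- Example term: (λ x . x) ▹ arr (refl a) (refl a)
example :: Tm
example = Cast (Lam "x" (Var "x")) (Arr (Refl a) (Refl a))
  where
    a = Base "a"
```

Loading this in GHCi and evaluating `example` prints the term's constructor tree.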

Because the language is simple (i.e. not dependent), we can define typing rules and small step semantics independently.

Typing

There is nothing particularly surprising in typing rules.

We'll need "well-typed coercion" rules too, but these are also very straightforward.

    Coercion Typing:  Δ ⊢ co : A ≡ B

    ------------------
    Δ ⊢ refl A : A ≡ A

    Δ ⊢ co : A ≡ B
    ------------------
    Δ ⊢ sym co : B ≡ A

    Δ ⊢ co₁ : C ≡ A
    Δ ⊢ co₂ : D ≡ B
    -------------------------------------
    Δ ⊢ arr co₁ co₂ : (C -> D) ≡ (A -> B)

Term typing rules use two contexts, one for term variables and one for coercion variables (GHC has them in one, but that is unhygienic; there's a GHC issue about that). The rules for variables, applications and lambda abstractions are as usual; the only new rule is the typing of the cast:

    Term Typing:  Γ; Δ ⊢ t : A

    Γ; Δ ⊢ t : A
    Δ ⊢ co : A ≡ B
    -------------------------
    Γ; Δ ⊢ t ▹ co : B

So far everything is good.
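The coercion typing rules and the cast rule can be read as two small functions. The sketch below is an illustration under assumptions, not the author's formalisation: `Base` is an assumed leaf type, and the coercion context Δ is dropped because the grammar shown has no coercion variables, so every coercion has well-defined endpoints.

```haskell
-- Types and coercions, as in the grammar. `Base` is an assumed leaf type.
data Ty = Base String | Fun Ty Ty
  deriving (Eq, Show)

data Co = Refl Ty | Sym Co | Arr Co Co
  deriving (Eq, Show)

-- | Endpoints of a coercion: @coType co == (a, b)@ stands for co : a ≡ b.
coType :: Co -> (Ty, Ty)
coType (Refl a) = (a, a)                  -- refl A : A ≡ A
coType (Sym co) =                         -- sym flips the endpoints
  let (a, b) = coType co
  in (b, a)
coType (Arr co1 co2) =                    -- co₁ : C ≡ A, co₂ : D ≡ B
  let (c, a) = coType co1
      (d, b) = coType co2
  in (Fun c d, Fun a b)                   -- (C -> D) ≡ (A -> B)

-- | The cast rule: given the type of t and a coercion co : A ≡ B,
-- return B when t really has type A, and Nothing otherwise.
castTy :: Ty -> Co -> Maybe Ty
castTy tTy co
  | tTy == a  = Just b
  | otherwise = Nothing
  where
    (a, b) = coType co
```

For instance, `castTy (Base "a") (Refl (Base "b"))` is `Nothing`, because the coercion's source type does not match the type of the term being cast.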

But when playing with coercions, it's important to specify the reduction rules too. Ultimately it would be great to show that we could erase coercions either before or after reduction, and that either way we'd get the same result. So let's try to specify some reduction rules.

Reduction rules

Probably the simplest approach to reduction rules is to try to inherit most reduction rules from the system without coercions; and consider coercions and casts as another "type" and "elimination form".

An elimination of refl would compute trivially:

    t ▹ refl A ~~> t

This is good.

But what should we do when the cast's coercion is headed by arr?

    t ▹ arr co₁ co₂ ~~> ???

One "easy" solution is to eta-expand t, and split the coercion:

    t ▹ arr co₁ co₂ ~~> λ x . t (x ▹ sym co₁) ▹ co₂

We cast an argument before applying it to the function, and then cast the result. This way the reduction is type preserving.
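The two cast reductions above could be sketched as a single-step function in Haskell. This is an illustration under assumptions: the data types and the `fresh` helper are invented for this example, `Base` is an assumed leaf type, and no rule is given for `sym` because the post does not state one at this point.

```haskell
data Ty = Base String | Fun Ty Ty
  deriving (Eq, Show)

data Co = Refl Ty | Sym Co | Arr Co Co
  deriving (Eq, Show)

data Tm = Var String | App Tm Tm | Lam String Tm | Cast Tm Co
  deriving (Eq, Show)

-- | All variable names occurring in a term, free or bound.
names :: Tm -> [String]
names (Var x)    = [x]
names (App f t)  = names f ++ names t
names (Lam x t)  = x : names t
names (Cast t _) = names t

-- | A variable name that does not occur in the term (hypothetical helper).
fresh :: Tm -> String
fresh t = go 0
  where
    used = names t
    go :: Int -> String
    go n
      | v `elem` used = go (n + 1)
      | otherwise     = v
      where
        v = "x" ++ show n

-- | One reduction step at the root of a cast.
stepCast :: Tm -> Maybe Tm
stepCast (Cast t (Refl _)) = Just t          -- t ▹ refl A ~~> t
stepCast (Cast t (Arr co1 co2)) =            -- eta-expand and split
  Just (Lam x (Cast (App t (Cast (Var x) (Sym co1))) co2))
  where
    x = fresh t
stepCast _ = Nothing                         -- no rule sketched here
```

Picking a name that occurs nowhere in `t` is a deliberately blunt way to avoid variable capture in the eta-expansion; a real implementation would more likely use de Bruijn indices.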

But this approach is not zero-cost.

We cannot erase coercions completely: we still need some indicator that there was an arrow coercion, so that we remember to eta-expand:

    t ▹ ??? ~~> λ x . t x

Conclusion

Treating coercions as another type constructor with cast operation being its elimination form may be a good first idea, but is not good enough. We won't be able to completely erase such coercions.
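To make the failure concrete, here is a small Haskell sketch of erasure applied before and after the eta-expanding reduction. It is an illustration under assumptions: the data types, the `erase` function and the sample coercions are invented for this example and are not taken from the Agda file.

```haskell
data Ty = Base String | Fun Ty Ty
  deriving (Eq, Show)

data Co = Refl Ty | Sym Co | Arr Co Co
  deriving (Eq, Show)

data Tm = Var String | App Tm Tm | Lam String Tm | Cast Tm Co
  deriving (Eq, Show)

-- | Erase every cast, keeping only the underlying lambda term.
erase :: Tm -> Tm
erase (Var x)    = Var x
erase (App f t)  = App (erase f) (erase t)
erase (Lam x t)  = Lam x (erase t)
erase (Cast t _) = erase t

-- Sample coercions (arbitrary choices for the demonstration).
co1, co2 :: Co
co1 = Refl (Base "a")
co2 = Refl (Base "b")

-- | The redex  f ▹ arr co₁ co₂ ...
redex :: Tm
redex = Cast (Var "f") (Arr co1 co2)

-- | ... and its reduct  λ x . f (x ▹ sym co₁) ▹ co₂.
reduct :: Tm
reduct = Lam "x" (Cast (App (Var "f") (Cast (Var "x") (Sym co1))) co2)

-- | Erasing before the step gives just f.
erasedBefore :: Tm
erasedBefore = erase redex

-- | Erasing after the step gives λ x . f x.
erasedAfter :: Tm
erasedAfter = erase reduct
```

Here `erasedBefore` is `Var "f"` while `erasedAfter` is `Lam "x" (App (Var "f") (Var "x"))`. The two agree only up to eta, so erasure does not commute with this reduction rule on the nose, which is the sense in which the cast leaves a runtime trace.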

Another idea is to complicate the system a bit. We could "delay" coercion elimination until the result is scrutinised by another elimination form, e.g. in application case: